About

记录了一些代码阅读和读书笔记.

PyTorch

相关缩写

C10 = Caffe Tensor Library(Core Tensor Library)存在这两种说法吧

aten = a tensor library

THP = TorcH Python

TH = TorcH

THC = TorcH Cuda

THCS = TorcH Cuda Sparse

THCUNN = TorcH Cuda Neural Network

THD = TorcH Distributed

THNN = TorcH Neural Network

TH = TorcH Sparse

tch-rs

tensor 相关结构之间关系

autograd

梯度反向传播

requires_grad 这个具有传染性, grad_fn

>> t1 = torch.randn((3,3), requires_grad=True);

>> t2 = 3 * t2;

>> t2.requires_grad

True

>>> t2.grad_fn

<MulBackward0 object at 0x100df4f70>

torch.nn.Autograd.Function class

toch/autograd/function.py:222

# mypy doesn't understand `with_metaclass` from torch._six

class Function(with_metaclass(FunctionMeta, _C._FunctionBase, FunctionCtx, _HookMixin)): # type: ignore[misc]

r"""Base class to create custom `autograd.Function`

To create a custom `autograd.Function`, subclass this class and implement

the :meth:`forward` and :meth:`backward` static methods. Then, to use your custom

op in the forward pass, call the class method ``apply``. Do not call

:meth:`forward` directly.

To ensure correctness and best performance, make sure you are calling the

correct methods on ``ctx`` and validating your backward function using

:func:`torch.autograd.gradcheck`.

See :ref:`extending-autograd` for more details on how to use this class.

最主要的两个方法,forward 和backward

gradient that is backpropagated to f from the layers in front of it multiplied by the local gradient of the output of f with respect to it's inputs.

向自己的每个input 反向传播。

def backward (incoming_gradients):

self.Tensor.grad = incoming_gradients

for inp in self.inputs:

if inp.grad_fn is not None:

new_incoming_gradients = //

incoming_gradient * local_grad(self.Tensor, inp)

inp.grad_fn.backward(new_incoming_gradients)

else:

pass

学习资料

- PyTorch 101, Part 1: Understanding Graphs, Automatic Differentiation and Autograd

- Implementing word2vec in PyTorch (skip-gram model)

- The Ultimate Guide To PyTorch

《Pytorch Pocket Reference》 笔记

Tensor

tensor相关属性和方法

import torch

x = torch.tensor([[1.0,2], [3,4]], requires_grad=True)

print(x)

print(x[1,1])

print(x[1,1].item())

print("shape of x is", x.shape)

print("ndim of x is", x.ndim)

print("device of x is ", x.device)

print("layout of x is", x.layout)

f = x.pow(2).sum()

print(f)

print("before backward, grad of x is", x.grad)

f.backward()

print("after backwrad, grad of x is", x.grad)

print("grad_fn of x is", x.grad_fn)

print("grad_fn of f is", f.grad_fn)

deep learning dev with Pytorch

深度学习开发流程,先数据预处理,然后做模型训练,最后做模型推理部署。

数据预处理

模型开发

torch.nn 预制了很多函数和神经网络层

- 神经网络layer(有全连接层,卷积,池化,normalized, dropout, 各种非线性激活函数)

- 损失函数

- 优化器

- layer的container.

模型训练

from torch import optim

from torch import nn

# 定义模型

model = LeNet5().to(device)

# 定义损失函数

criterion = nn.CrossEntropyLoss()

# 定义优化器

optimizer = optim.SGD(model.parameters(), lr=0.001, momentum=0.9)

# 模型训练和验证

N_EPOCHS = 10

for epoch in range(N_EPOCHS):

# Training

train_loss = 0.0

model.train()

for inputs, labels in trainloader:

inputs = inputs.to(device)

labels = labels.to(device)

optimizer.zero_grad()

outputs = model(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

train_loss += loss.item()

# Validation

val_loss = 0.0

model.eval()

for inputs, labels in valloader:

inputs = inputs.to(device)

labels = labels.to(device)

模型部署

训练好的模型保存到指定的文件,然后在后面使用时,使用load_state_dict重新加载模型

model.train()

# train model

# save model to file

torch.save(model.state_dict(), "./lenet5_model.pt")

# load model

model = LeNet5().to(device)

model.load_state_dict(torch.load("./lenet5_model.pt"))

# use model predict

model.eval()

mini torch

https://minitorch.github.io

Tengine

repo 地址: https://github.com/OAID/Tengine

函数调用主流程

register op

NLP

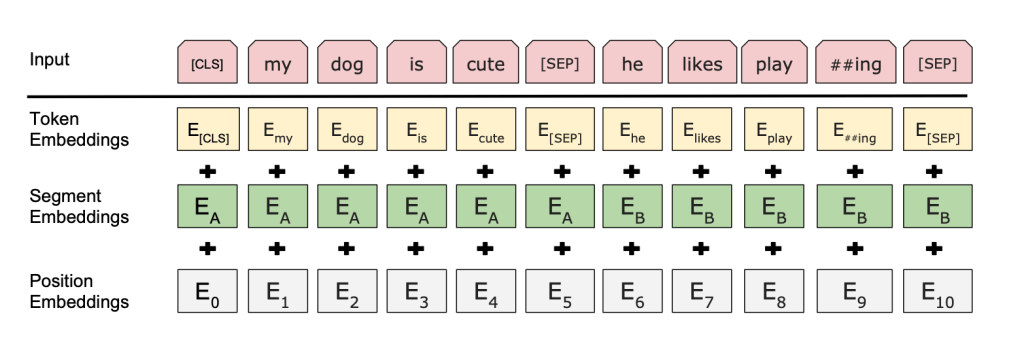

Bert

How to code bert 笔记

https://neptune.ai/blog/how-to-code-bert-using-pytorch-tutorial

The Annotated transformer 笔记

Self-attention, sometimes called intra-attention is an attention mechanism relating different positions of a single sequence in order to compute a representation of the sequence

symbol representation -> encode -> continuous representations -> decode -> symbol representation

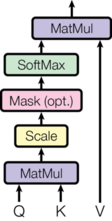

Attention

An attention function can be described as mapping a query and a set of key-value pairs to an output, where the query, keys, values, and output are all vectors. The output is computed as a weighted sum of the values, where the weight assigned to each value is computed by a compatibility function of the query with the corresponding key

he two most commonly used attention functions are additive attention (cite), and dot-product (multiplicative) attention

HuggingFace transformers

huggingface/transformers

Questions: 怎么杨使用已经下载的model?

相关资料:

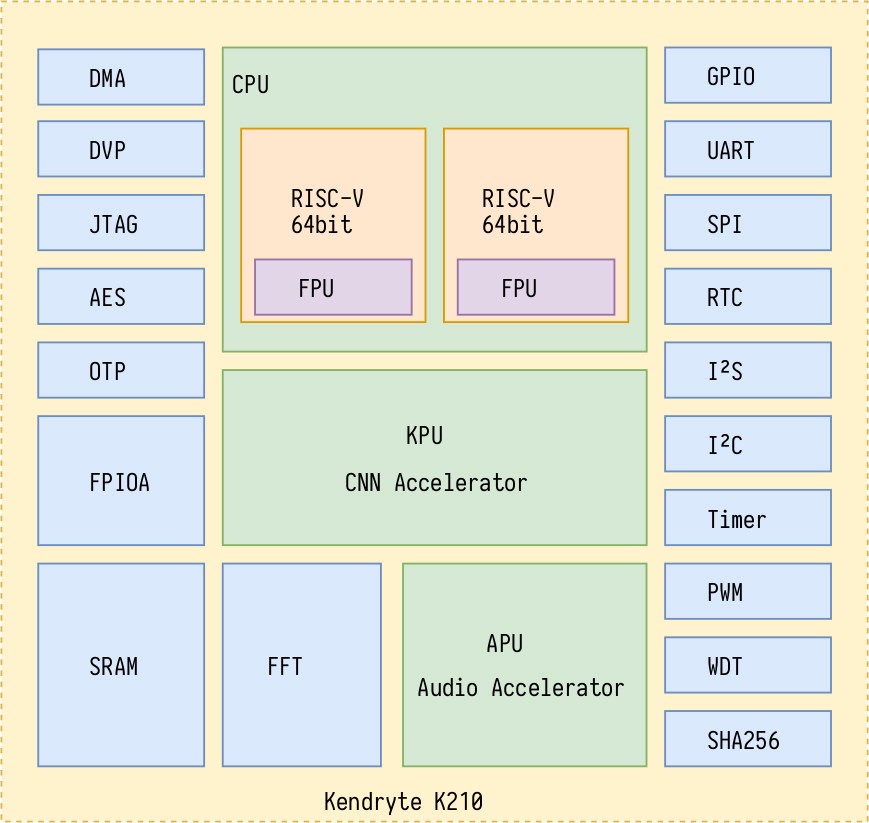

nncase

nncase 是一个为 AI 加速器设计的神经网络编译器。

comile流程

Compile过程

k210的kernels, 为啥看到的都是c++代码?不是应该直接调driver的方法吗?

kpu

riscv-plic-spec:https://github.com/riscv/riscv-plic-spec/blob/master/riscv-plic.adoc

RISC-V Platform-Level Interrupt Controller Specification

facedetect example

kpu

kpu_load_kmodel

kpu_run_kmodel

关键函数为ai_step,是pli中断的callback, 会一层层的执行kmodel

看了kpu.c中的代码,貌似只有conv2d是在kpu上跑的,其他算子的都是c++代码

应该是在cpu上跑的。

dmac

plic

RISC-V Platform-Level Interrupt Controller Specification

全局中断,也就是所说的外部中断,其他外设统统都是外部中断。外部中断连接在Platform-Level Interrupt Controller (PLIC)上。

PLIC需要一个仲裁决定谁先中断,存在个优先级的问题。

k210 Standalone SDK

相关资料文档

- Github地址:https://github.com/kendryte/kendryte-standalone-sdk

- K210 sdk开发文档: 英文pdf, 中文pdf

platform

根据platform.h中的地址定义,地址空间布局如下:

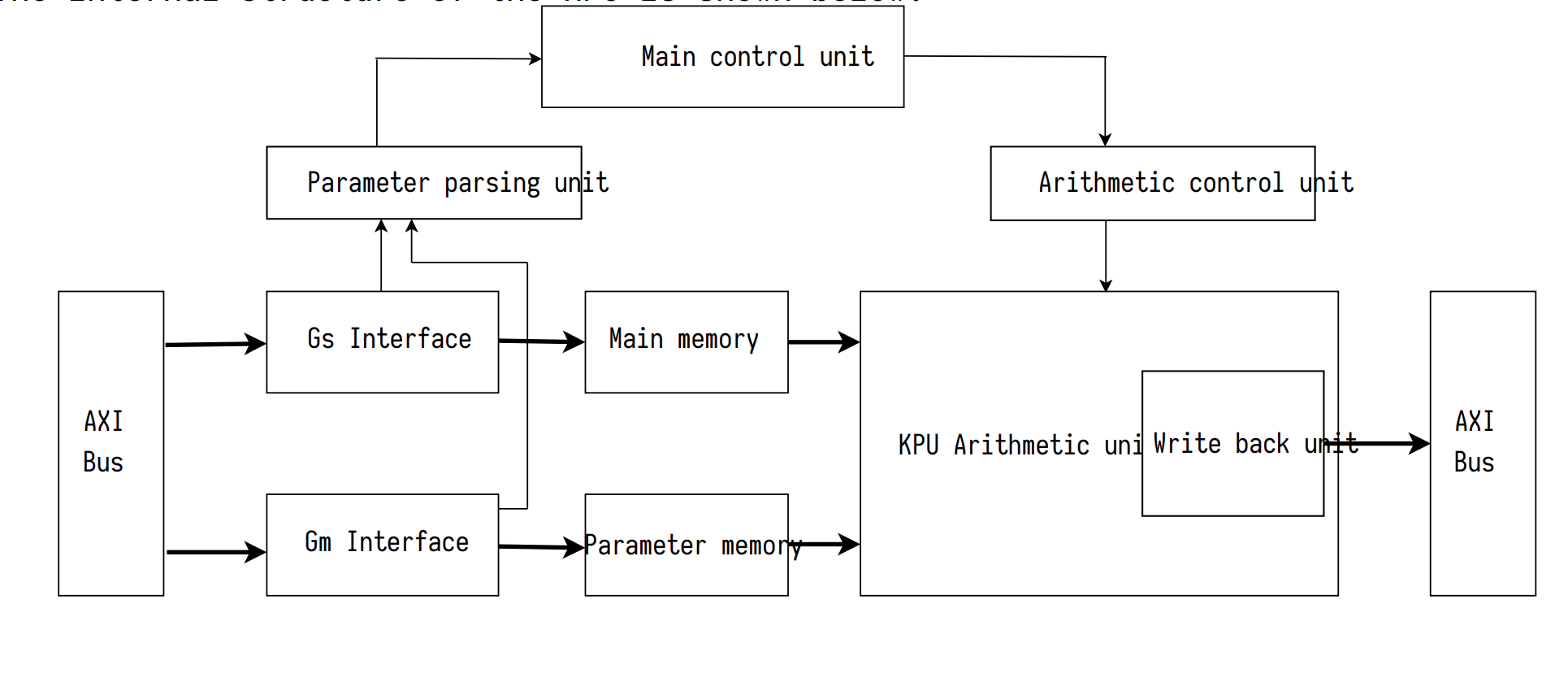

kpu

AXI BUS是啥

你可以理解为一种用于传输数据的模块或者总线。用于两个模块或者多个模块之间相互递数据。反正它有一堆优点。。被SOC广泛采用了。

riscv-plic-spec:https://github.com/riscv/riscv-plic-spec/blob/master/riscv-plic.adoc

RISC-V Platform-Level Interrupt Controller Specification

kpu_load_kmodel

kpu_run_kmodel

关键函数为ai_step,是pli中断的callback, 会一层层的执行kmodel

看了kpu.c中的代码,貌似只有conv2d是在kpu上跑的,其他算子的都是c++代码

应该是在cpu上跑的。

dmac

plic

RISC-V Platform-Level Interrupt Controller Specification

全局中断,也就是所说的外部中断,其他外设统统都是外部中断。外部中断连接在Platform-Level Interrupt Controller (PLIC)上。

PLIC需要一个仲裁决定谁先中断,存在个优先级的问题。

参考文献

face detect example

Ray

相关资料整理

主要组件

- Gcs: Global Control State, 存储了代码,输入参数,返回值

- Raylet:Local Scheduler, Worker通过Raylet和Gcs通信。

- Redis

Ray Paper

- 论文arxiv 地址 ray paper

Ray Remote

Actor 生命周期是怎么管理的。

LocalSchedule task保存在哪里?

dynamic task dag是怎么构建的?

Ray和Dask的区别是什么?

GcsServer

GcsServer Star流程

tensorflow

some notes on reading tensorflow source code

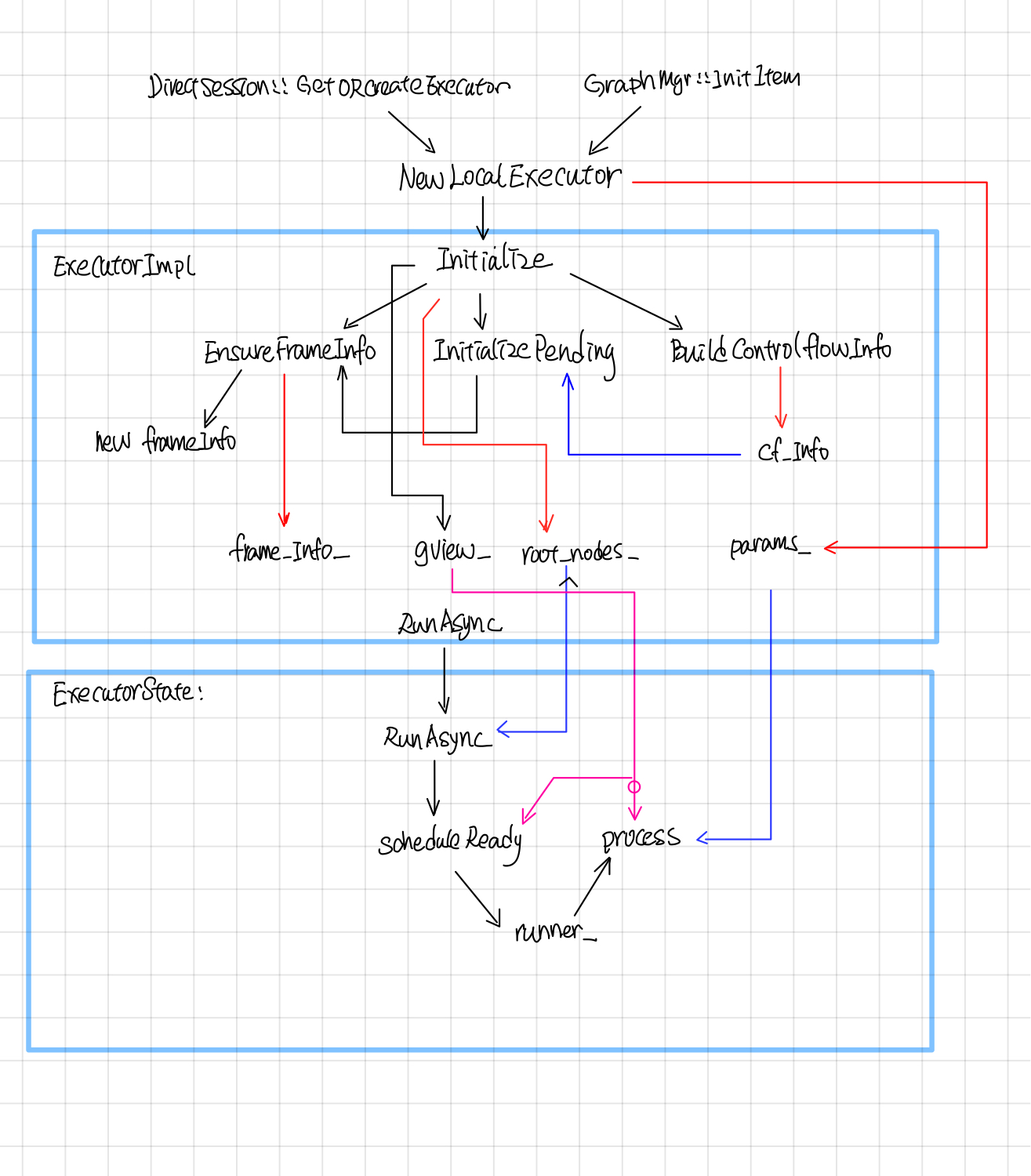

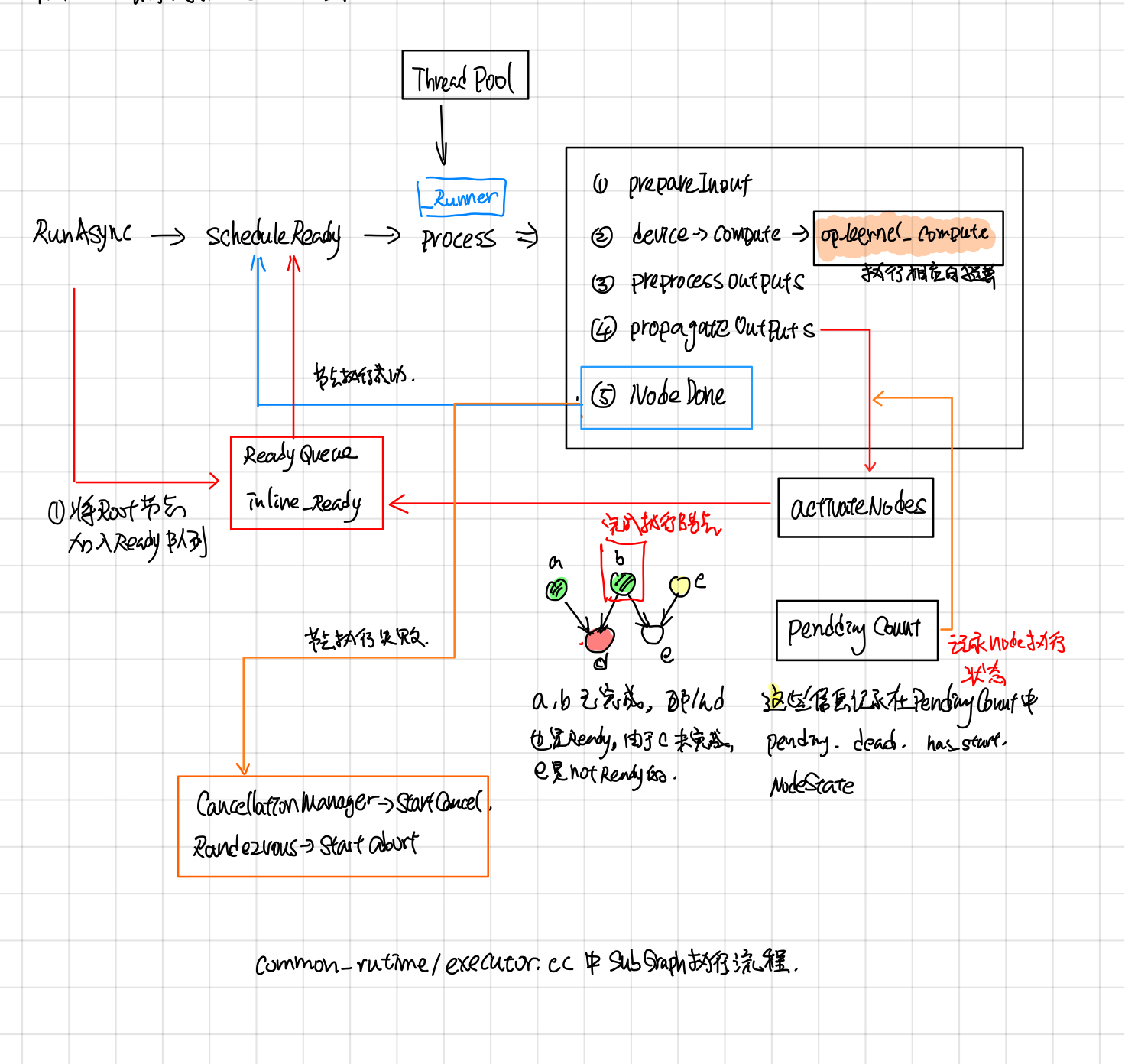

Tensorflow Graph Executor(草稿)

摘要

Tensorflow中单机版的(direct session)会按照device将graph先划分成子图subgraph, 然后每个subgraph会交给一个execturo去执行,分布式的(GrpSession) 首先会将graph按照worker划分,每个worker划分成一个子图,然后注册到每个worker的graph_mgr, 并在graph_mgr中再按照device将worker_subgraph划分成device的subgraph, 最后每个device对应的subgraph会由executor去执行,Tensorflow中的graph执行示意图如下(图片来自tensorflow-talk-debugging)。

本文主要分析了executor在执行graph时,Node的执行调度以及node的输入输出数据, 执行状态是如何保存的,最后结合代码和Tensorflow control flow implemention这部分文档分析了的control flow的具体实现。主要涉及的代码为common_runtime/executor.cc

Executor中主要类

Executor

Executor为基类,对外提供了两个接口Run和RunAsync, 其中Run是对RunAsync简单的一层包装。

// Synchronous wrapper for RunAsync().

Status Run(const Args& args) {

Status ret;

Notification n;

RunAsync(args, [&ret, &n](const Status& s) {

ret = s;

n.Notify();

});

n.WaitForNotification();

return ret;

}

Executor基类只要去实现RunAsync就行。

virtual void RunAsync(const Args& args, DoneCallback done) = 0;

ExecutorImpl

ExecutorImpl继承实现了Executor,它的RunAsync实现转发给了ExecutorState::RunAsync, ExecutorImpl主要的工作是从Graph中解析出一些静态信息,比如FrameInfo, GraphView, 由后面的ExecutorState执行的时候使用。

void ExecutorImpl::RunAsync(const Args& args, DoneCallback done) {

(new ExecutorState(args, this))->RunAsync(std::move(done));

}

ExecutorState

Executor中的调用关系

ExecutorImpl call flow

Executor被调用的入口为NewLocalExecutor, 在DirectSesion中会为每个subgraph创建一个executor, 然后交给ExecutorBarrier同时执行多个Executor。NewLocalExecutor在ExecutorImpl成员函数中的调用过程如下:

Exector::RunAsync这个会被转发给ExecutorState::RunAsync(这个函数的执行逻辑见下文)

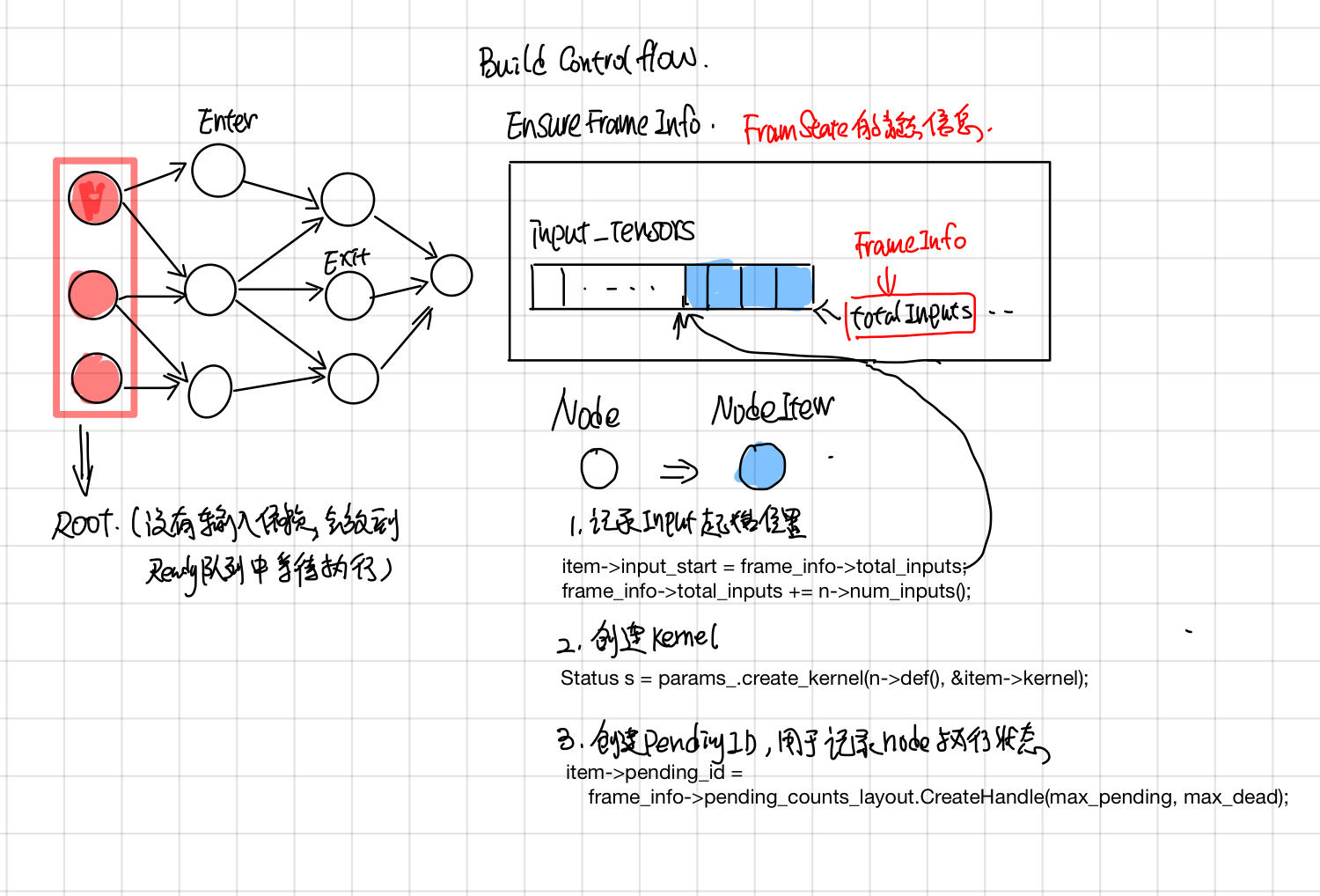

ExecutorImpl::Initialize

在ExecutorImpl::Initialize中,对于graph中的每个node, 创建对应的NodeItem, 主要包含了三块:

- 调用params.create_kernal, 创建nodeItem->kernal.

- 记录nodeItem.input_start, input_start 是该node在它所属frame的input_tensors中的偏移index, 这个在后面的ProcessInputs和ProcessOutputs中会用到。

- 创建node对应的pending_id, pending_id用于找到记录它执行状态的pendingCount, 这个在后面的ActiveNode中会用到.

在BuildCtronlFlow中会建立好framename之间的父子关系, frameInfo是frame的静态信息(对应着执行时候的FrameState动态信息),并且建立了从node id找到node所属frame name的映射关系,包含了frame中的total inputs, 这个frame所包含的node.

ExecutorState::RunAsync

ExecutorImpl::Process

Control Flow

后来在[1]中发现节点还有Switch, Merge, IterNext, Enter, Exit 五个flow control node,用来实现while循环,为此tensorflowe引入了frame的概念,可以粗略的认为和函数调用一样吧, 在遇到Enter node的时候,就新建一个child frame,把inputs(类似于函数函数调用时候参数入栈)一样,forward到child frame中,在遇到Exit node,就把输出放到parent frame 中(类似于将函数的return值入栈)。

未完待续

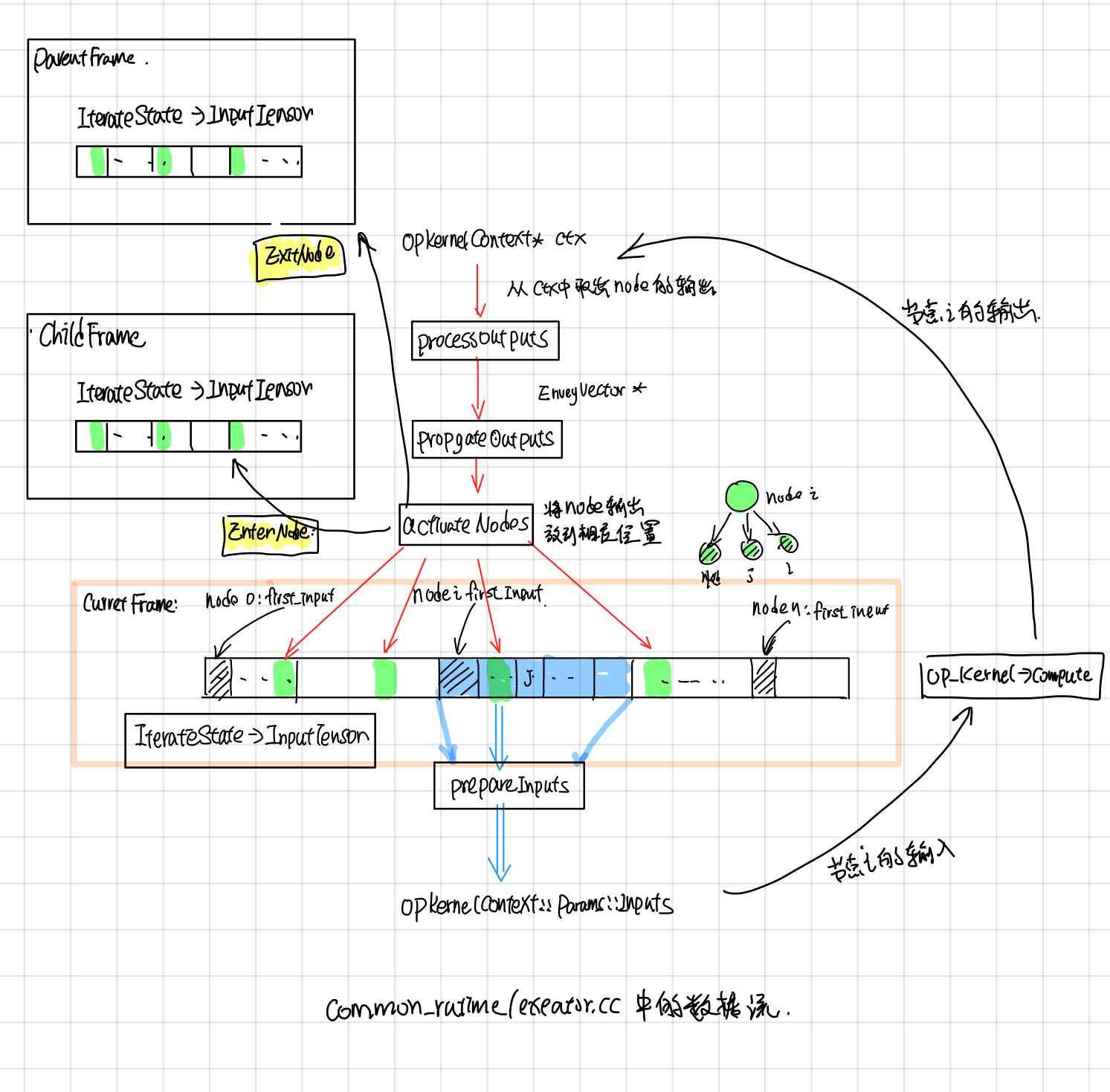

Executor中数据流程

参考

- [Tensorflow control flow implemention]

- tensorflow-talk-debugging

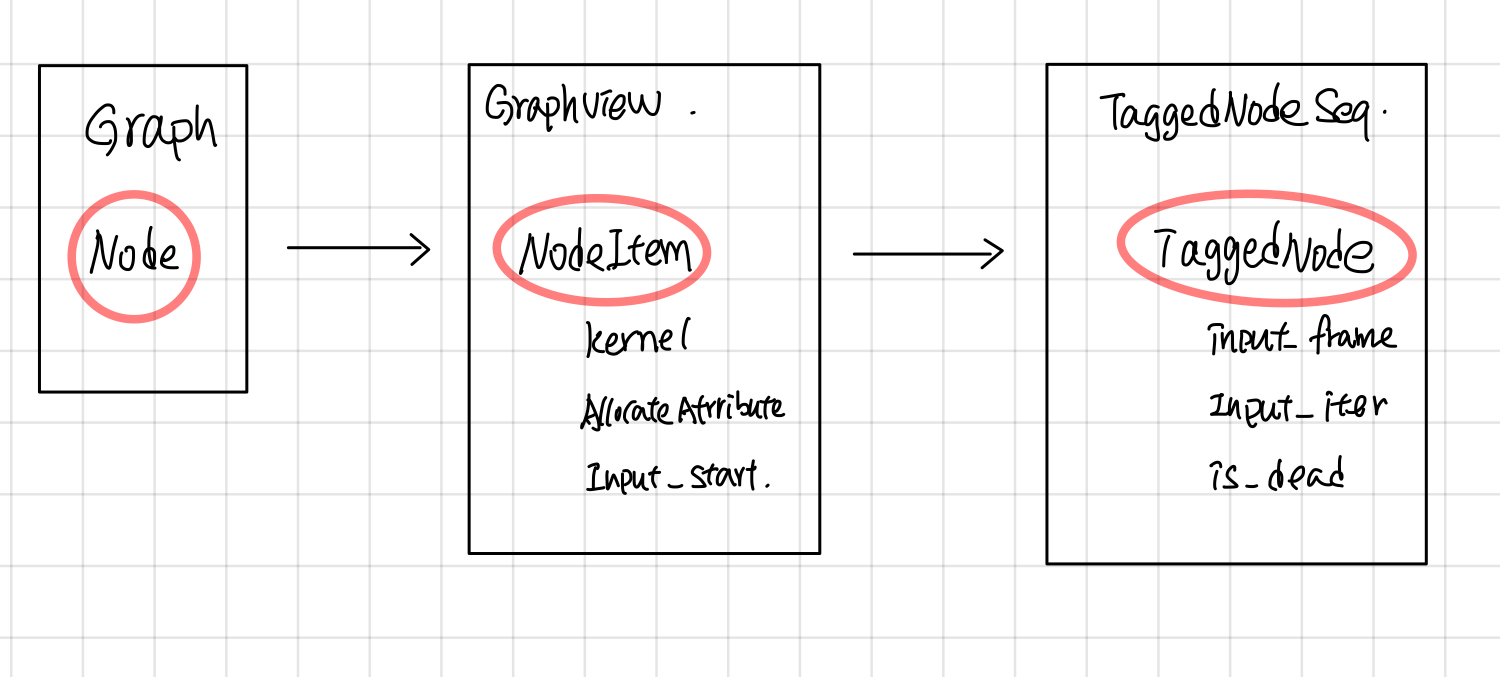

Sub Graph 预处理: Node => NodeItem => TaggedNode (Draft)

引言

下图是一个graph中每个node被处理的过程,首先在ExecutorImpl::Initialize的时候,将node 处理成NodeItem,创建node对应的kernal, 然后在node ready可执行的时候,会创建一个TaggedNode(TaggedNode主要多了个frame指针,记录了当前执行的frame), 并将它放入Ready队列中,最后交给ExecutorState::Process去执行这个Node。

NodeItem

NodeItem的主要作用是将Graph中每个node的op,转换成可以在device上执行的kernal, 另一方面,记录该node输入tensor的位置,并且使用PendingCount来记录Node的执行状态。 Gview可以看成是NodeItem的容器,根据node的id就可以找到相应的NodeItem, 对于graph中的每个node, 在ExecutorImpl::Initialize中都会创建一个NodeItem,放到Gview中。

NodeItem 主要包含的字段

- kernel: 由params.create_kernel创建,kernel是在device上执行的主要对象,kerenl 并将在ExecutorImpl的析构函数被params.delete_kernel删除。

// The kernel for this node.

OpKernel* kernel = nullptr;

- input_start:纪录了在当前IteratorState的input_tensors中开始的index。这个node的输入为:input_tensors[input_start: input_start + num_inputs]这部分对应的Tensors。

// Cached values of node->num_inputs() and node->num_outputs(), to

// avoid levels of indirection.

int num_inputs;

int num_outputs;

// ExecutorImpl::tensors_[input_start] is the 1st positional input

// for this node.

int input_start = 0;

// Number of output edges.

size_t num_output_edges;

- pending_id: 根据这个id在当前的IteratorState中找到对应的PendingCount,从而找到这个nodeItem的执行状态。

PendingCounts::Handle pending_id;

- expensive/async kernel: 标志表明kernel是否是Async的和expensive的。

bool kernel_is_expensive : 1; // True iff kernel->IsExpensive()

bool kernel_is_async : 1; // True iff kernel->AsAsync() != nullptr

- control node ,标志该node是否是Control flow node, 以及类型

bool is_merge : 1; // True iff IsMerge(node)

bool is_enter : 1; // True iff IsEnter(node)

bool is_exit : 1; // True iff IsExit(node)

bool is_control_trigger : 1; // True iff IsControlTrigger(node)

bool is_sink : 1; // True iff IsSink(node)

// True iff IsEnter(node) || IsExit(node) || IsNextIteration(node)

bool is_enter_exit_or_next_iter : 1;

- allocate attribute: 影响device所返回的allocator,从而影响kernal执行时候,申请内存时候的处理行为。

// Return array of per-output allocator attributes.

const AllocatorAttributes* output_attrs() const { return output_attr_base(); }

InferAllocAttr主要根据device, send, recv等节点, 来设置是否是gpu_compatible的,

attr->set_nic_compatible(true);

attr->set_gpu_compatible(true);

其中AllocatorAttributes主要影响GpuDevice所返回的allocator上。

//common_runtime/gpu/gpu_device_factory.cc

Allocator* GetAllocator(AllocatorAttributes attr) override {

if (attr.on_host()) {

if (attr.gpu_compatible() || force_gpu_compatible_) {

ProcessState* ps = ProcessState::singleton();

return ps->GetCUDAHostAllocator(0);

} else {

return cpu_allocator_;

}

} else {

return gpu_allocator_;

}

}

TaggedNode

TaggedNode 增加了了一个FrameState指针,指向了Node将要执行的FrameState, input_iter, input_frame加上input_iter可以确定了

struct TaggedNode {

const Node* node = nullptr;

FrameState* input_frame = nullptr;

int64 input_iter = -1;

bool is_dead = false;

TaggedNode(const Node* t_node, FrameState* in_frame, int64 in_iter,

bool dead) {

node = t_node;

input_frame = in_frame;

input_iter = in_iter;

is_dead = dead;

}

在node处于ready 可执行状态的时候,会创建一个TaggedNode, 并放到TaggedNodeSeq队列中,等待调度执行。

ExecutorState::FrameState::ActivateNodes ==>

ready->push_back(TaggedNode(dst_item->node, this, iter, dst_dead));

ExecutorState::RunAsync ==>

for (const Node* n : impl_->root_nodes_) {

DCHECK_EQ(n->in_edges().size(), 0);

ready.push_back(TaggedNode{n, root_frame_, 0, false});

}

获取node输入tensors指针

首先根据TaggedNode中的input_frame,input_iter获取node的输入tensors

Entry* GetInputTensors(FrameState* input_frame,

int64 input_iter) const NO_THREAD_SAFETY_ANALYSIS {

return input_frame->GetIteration(input_iter)->input_tensors;

}

然后根据NodeItem中定义的input_start获取first_input tensor的指针

//在ExecutorState::Process中:

Entry* input_tensors = GetInputTensors(input_frame, input_iter);

Entry* first_input = input_tensors + item.input_start;

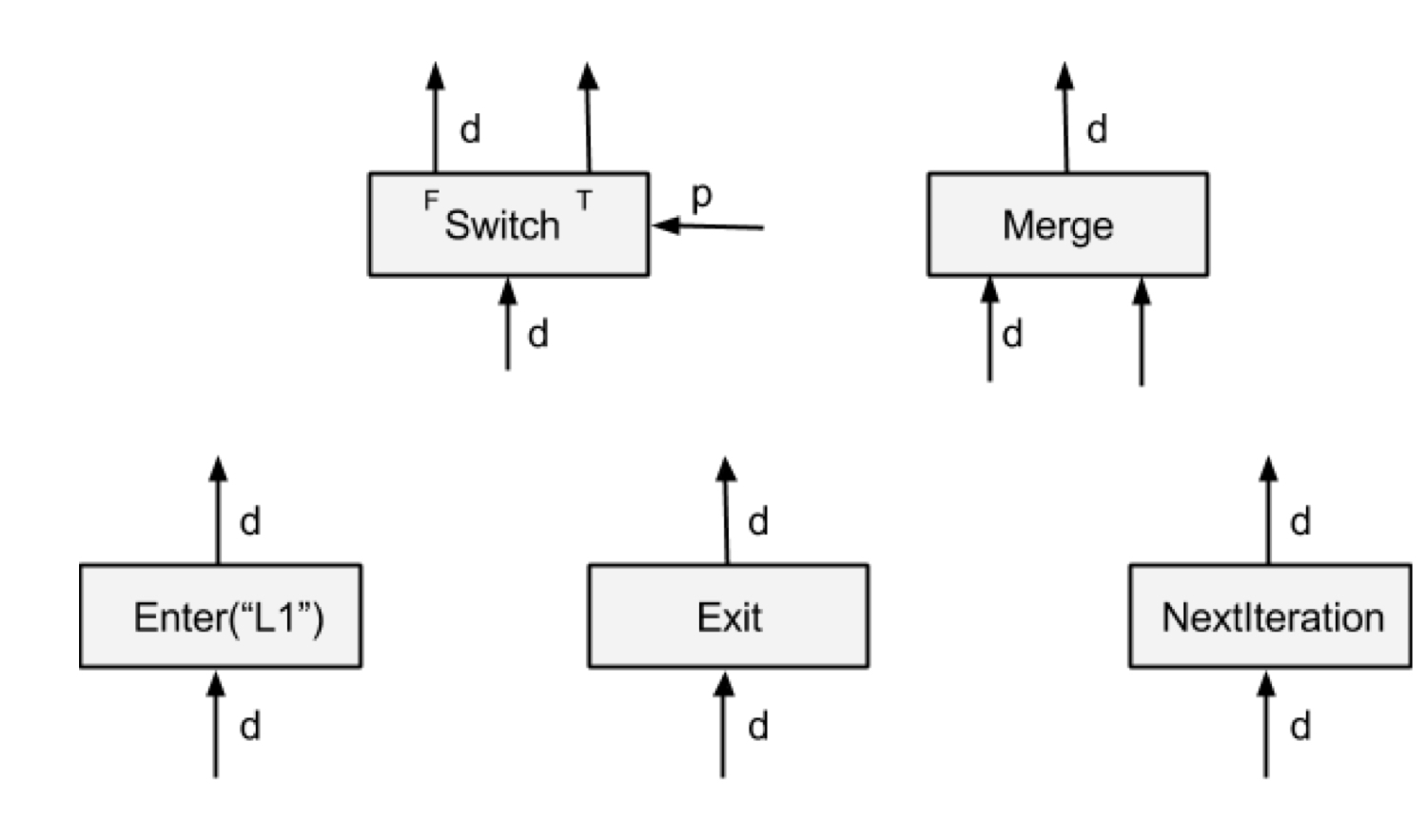

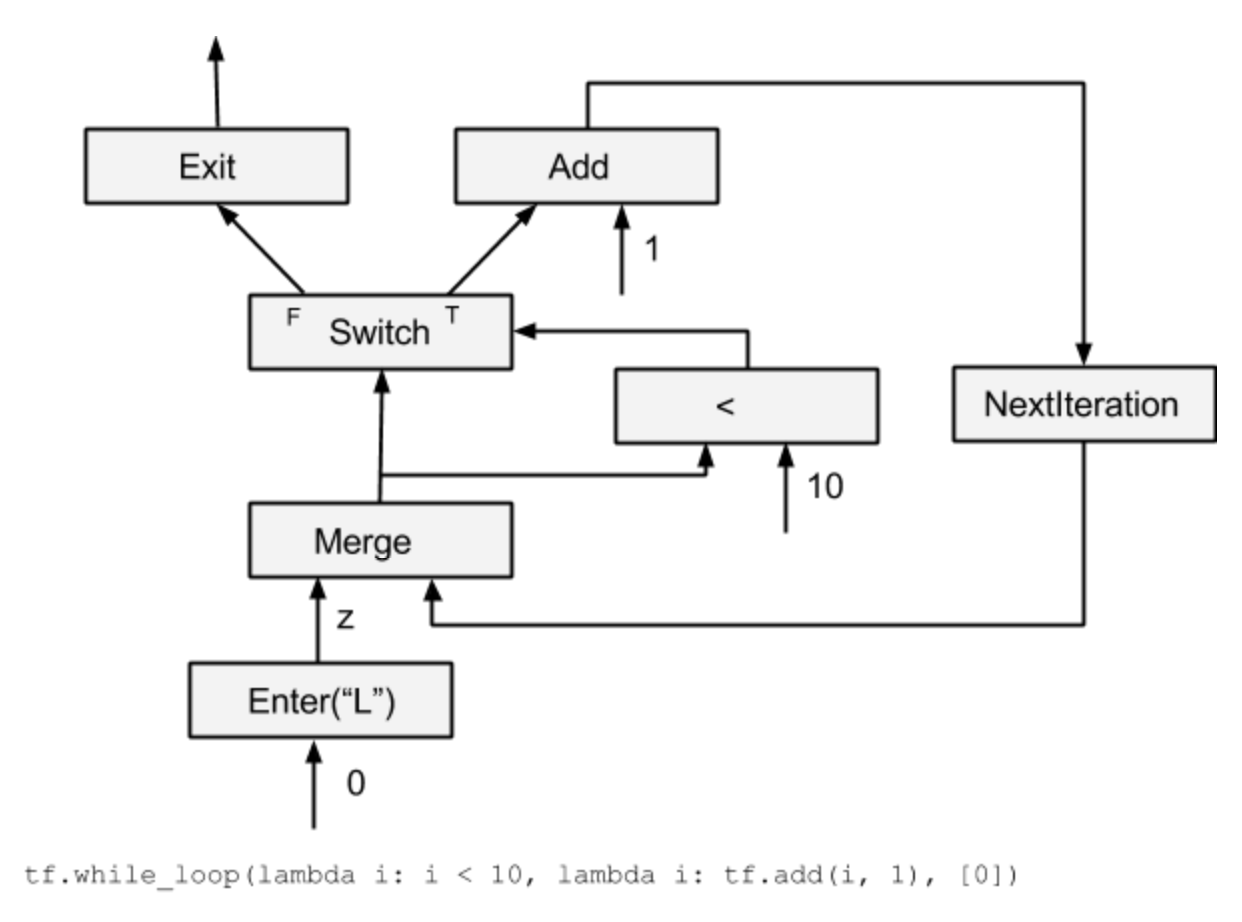

Flow Control op

在Tensorflow中,graph中每个node的op,都在一个execution Frame中执行,Enter/Exit分别负责execution Frame的创建和删除,如果把execution frame和函数调用做类比的话,那么Enter有点类似于传参,而Exit则类似于return 返回值。 而switch/merge/nextIteration 则用于实现类似于while/if之类的分支跳转和循环。本节主要参照 1 这篇文章。

flow control op

Tensorflow中control flow op对应具体定义如下

switch

A Switch operator forwards the input tensor d to one of its outputs depending on the boolean tensor of the control input p. A Switch is enabled for execution when both its inputs are available.

Switch 根据predict将输入tensor导出到相应的true/false输出。没获得输出的分支会被标记为dead状态(有点类似于if/else中没被执行到的代码), 这个dead状态会往下传播。

Merge

A Merge operator forwards one of its available inputs to its output. A Merge is enabled for execution when any of its inputs is available. It is unspecified which available input it outputs if there are multiple inputs available.

Merge 将输入tensor中的一个导出到输出(先到先得),一般配合switch用

Enter

An Enter operator forwards its input to the execution frame that is uniquely identified by the given name. This Enter op is used to pass a tensor in one execution frame to a child execution frame. There can be multiple Enter ops to the same child execution frame, each making a tensor available (asynchronously) in that child execution frame. An Enter is enabled for execution when its input is available. A new execution frame is instantiated in the TensorFlow runtime when the first Enter op to that frame is executed

Enter node将输入tensor导入到一个frame中。frame name是唯一的,可以根据frame name来找到对应的frame, 在执行的时候,如果frame不存在的话,Enter会创建相应的子frame, Enter node所在的frame是该frame的parent frame.

Exit

An Exit operator forwards a value from an execution frame to its parent execution frame. This Exit op is used to return a tensor computed in a child execution frame back to its parent frame. There can be multiple Exit ops to the parent frame, each asynchronously passing a tensor back to the parent frame. An Exit is enabled when its input is available.

Exit node 从Frame中导出一个tensor到parent frame中。

NextIteration

A NextIteration operator forwards its input to the next iteration in the current execution frame. The TensorFlow runtime keeps track of iterations in an execution frame. Any op executed in an execution frame has a unique iteration id, which allows us to uniquely identify different invocations of the same op in an iterative computation. Note that there can be multiple NextIteration ops in an execution frame. The TensorFlow runtime starts iteration N+1 when the first NextIteration op is executed at iteration N. As more tensors enter an iteration by executing NextIteration ops, more ops in that iteration will be ready for execution. A NextIteration is enabled when its input is available.

NextIteration将输入导出到下个iteration, NextIteration导出的应该是循环变量,比如下面代码中的j和sum

for(int j=0, sum=0; j < 100;){

int tmp = i + 1;

j * = 2

sum += j;

}

While loop

可以通过上述的五个flow control node来实现tensorflow中的while loop

tf.while_loop(lambda i: i < 10, lambda i: tf.add(i, 1), [0])

可以看到NextIteration导入导出的是循环变量i,merge node可以用来初始化变量, 类似于 i= i || 0的效果, switch控制是否结束循环,Exit跳出循环。

在文献1中还讲述了dead传播,分布式的whileloop,以及while loop对应的gradient op.讲的比较深,后面再补上吧。

参考文献:

Executor Frame

引言

在Executor 执行Graph的时候,会首先分析Graph, 创建关于Graph中frame的静态信息,比如ControlFlowInfo和FrameInfo,对于graph中的每个node, 可以根据ControlFlowInfo去得到它对应的frame_name, 然后根据frame_name可以得到FrameInfo的一些信息。

而FrameState和IterationState这两个是动态的状态,由Executor在执行Graph时候动态创建的。FrameState对应着整个while loop,而IterationState则对应着while loop中的某个迭代。 FrameState中包了total_input(frame中所有node input个数等信息),IterationState中有个EntryVec用于保存某次迭代时候,node之间输入输出的Entry。

本文主要分析了Executor中ControlFlowInfo, FrameInfo, FrameState, IterationState,这几个和Executor Frame相关的struct, 以及它们之间的关系。

ExecutorImpl::ControlFlowInfo

ControlFlowInfo里面unique_frame_names保存了computation graph中所有frame的名字,frame_names则是个倒查表,索引对应于node->id, 可以根据frame_names[node->id()]找到node对应的frame_name.

struct ControlFlowInfo {

gtl::FlatSet<string> unique_frame_names;

std::vector<string> frame_names;

};

ControlFlowInfo的创建

BuildControlFlowInfo 会遍历整个graph, 然后处理Enter/Exit node, 填充好ControlFlowInfo中的字段,

- 如果遇到Enter node, 则进入子Frame, Enter node的每个输出node对应的frame_name都是EnterNode对应的 "frame_node"属性

//Enter node包含了frame_name 属性,

GetNodeAttr(curr_node->attrs(), "frame_name", &frame_name));

- 如果是Exit node, 则退出子Frame, Exit node的每个输出node对应的frame_name都是Exit node parent node的 frame_name

//other code

else if (IsExit(curr_node)) {

parent = parent_nodes[curr_id];

frame_name = cf_info->frame_names[parent->id()];

parent = parent_nodes[parent->id()];

}

- 如果是其他类型的node, 则node的每个输出node frame和当前node一致

parent = parent_nodes[curr_id];

frame_name = cf_info->frame_names[curr_id];

controlflow info被用到的地方

在executor中首先会根据node->id找到frame_name, 然后根据frame_name找到对应的FrameInfo

const string& frame_name = cf_info.frame_names[id];

FrameInfo* frame_info = EnsureFrameInfo(frame_name);

ExecutorImpl::FrameInfo

FrameInfo包含的主要字段如下:

// The total number of inputs to a frame.

int input_count;

int total_inputs;

PendingCounts::Layout pending_counts_layout;

PendingCounts* pending_counts; // Owned

input_count

input_count 代表graph中Enter到该frame的Enter Node个数, 统计个数的代码如下:

//ExecutorImpl::Initialize

for (const Node* n : graph_->nodes()) {

//other code..

if (IsEnter(n)) {

string enter_name;

TF_RETURN_IF_ERROR(GetNodeAttr(n->attrs(), "frame_name", &enter_name));

EnsureFrameInfo(enter_name)->input_count++;

}

}

total_inputs

total_inputs会在ExecutorState::IteratorState中用到,它的值为frame中所有node的inputs个数的总和。

// The total number of input tensors of a frame.

// == sum(nodes[*].num_inputs()) where nodes are the nodes in the frame.

int total_inputs;

total_inputs在后面的影响如下:

FrameInfo.total_inputs ==> FrameState.total_input_tensors ==> IterationsState.input_tensors(new Entry[total_input_tensors])

PendingCounts

- PendingCounts相关,pending_counts_layout在后面会用来创建Node的PendingCount, pending count会用来跟踪Node的状态(比如是否所有的input都已ready, Node是否已经执行过了,Node是否在Dead path),

struct FrameInfo由EnsureFrameInfo这个函数lazy创建,并在Intialize填充好它的字段。

FrameInfo* EnsureFrameInfo(const string& fname) {

auto slot = &frame_info_[fname];

if (*slot == nullptr) {

*slot = new FrameInfo;

}

return *slot;

}

FrameInfo将在ExecutorImpl的析构函数中被删掉。

~ExecutorImpl() override {

//other code

for (auto fiter : frame_info_) {

delete fiter.second;

}

ExecutorState::FrameState

前面两个ControlFlowInfo/FrameInfo都是静态的信息(所以叫XXXInfo),而FrameState和IterationState都是动态信息,会在Graph执行的时候动态创建。

创建FrameState: FindOrCreateChildFrame

在FindOrCreateChildFrame中,会调用InitializeFrameInfo从FrameInfo中抽取有用的字段

void InitializeFrameInfo(const string& enter_name) {

auto it_frame_info = executor->frame_info_.find(enter_name);

DCHECK(it_frame_info != executor->frame_info_.end());

ExecutorImpl::FrameInfo* finfo = it_frame_info->second;

pending_counts = finfo->pending_counts;

total_input_tensors = finfo->total_inputs;

num_pending_inputs = finfo->input_count;

nodes = finfo->nodes;

}

FindOrCreateChildFrame被调用的stack

Process -> PropagationOutputs -> FindOrCreateChildFrame

删除FrameState: DeleteFrame

1.在PropgateOutputs中,如果is_frame_done,就会调用DeleteFrame, DeleteFrame会向parent frame传播dead_exits(TODO: 这部分描述细化)

IterationState删除的地方

- CleanupFrameIterations

- frame->CleanupIterations

ExecutorState::IterationState

Entry* input_tensors;

// The number of outstanding ops for each iteration.

size_t outstanding_ops;

int outstanding_frame_count;

PendingCounts counts_;

FrameState和IterationState创建地方:

-

在ExecutorState的构造函数中会创建一个FrameState作为rootframe, 同时也会创建该frameState的第一个IterationState。

-

在执行完一个Node之后,PropagateOutputs在遇到Enter节点的时候,会调用FindOrCreateChildFrame来创建一个新的FrameState,以及该FrameState的第一个IterationState

-

在PropgateOutputs的时候,遇到NextIteration Node 会去调用FrameState::IncreatementIteration新增一个IterationState

-

所有的framesate都放在了outstanding_frames 这个map中,新建的framestate会插到这个map中,删除的时候会从这个map中去掉。

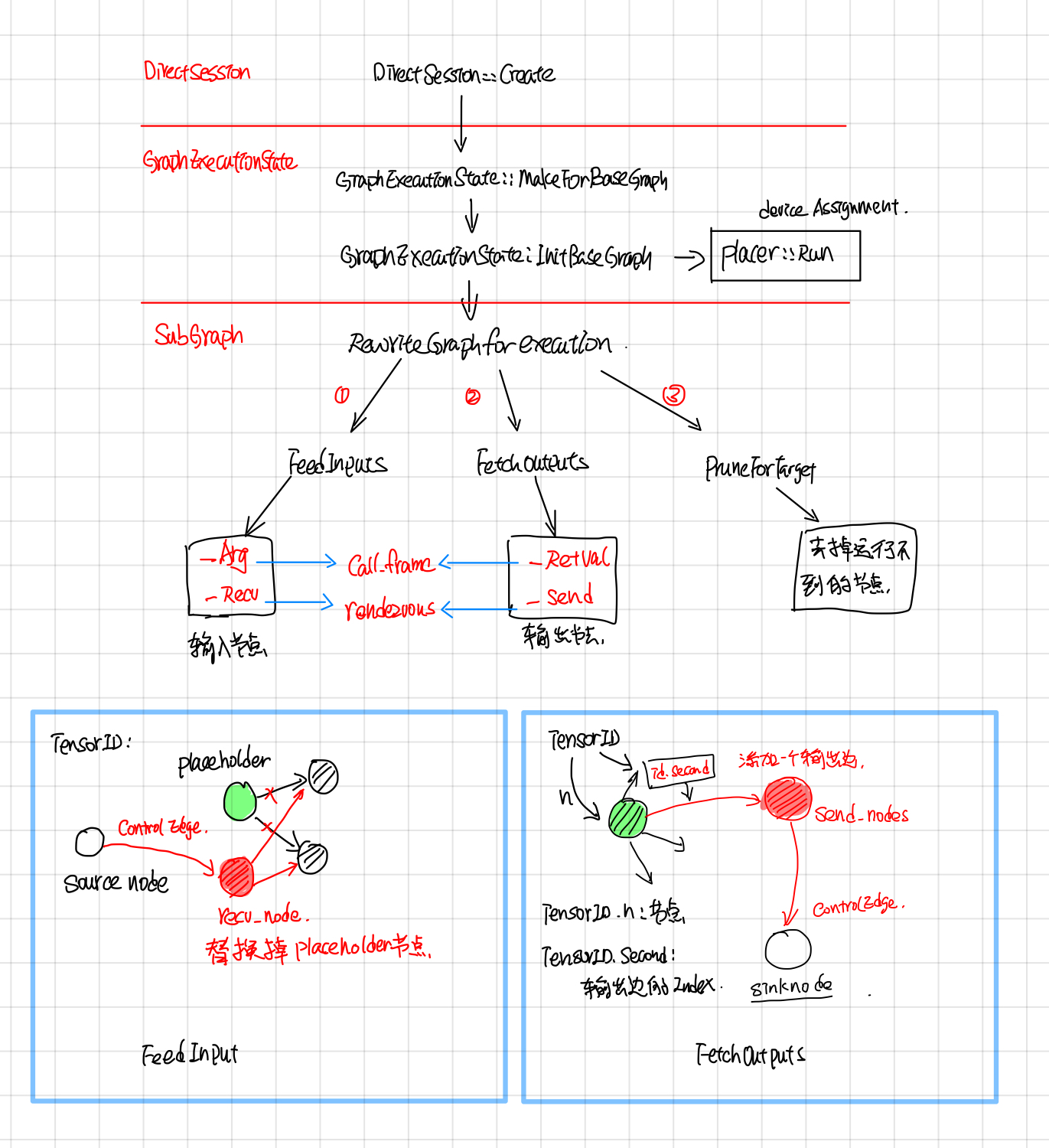

Tensorflow Direct Session (Draft)

摘要

本文主要分析了tensorflow 中DirectSession部分的代码。如果把executor 执行graph当成一个函数的话,那么Tensorflow中Session主要功能是把用户传过来的一些参数Feeds到compute graph中,然后运行到graph target node,最后在graph computation完成之后,取出用户指定名字的一些tensor。

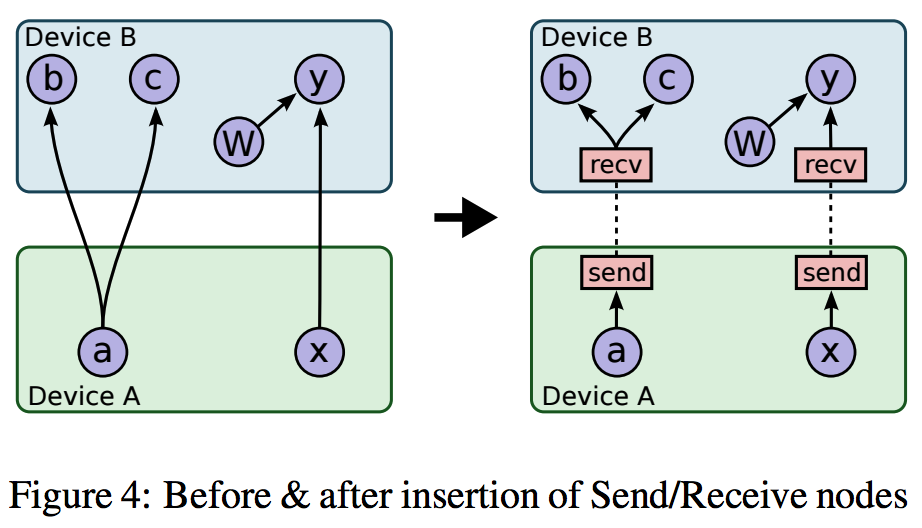

DirectSession 则主要工作以下几方面:

- Rewrite Graph: 将FeedInputs和FetchOutputs节点加到graph中,然后去掉graph中运行不到的节点,最后采用并查集的方式,给graph中每个node分配一个device。

- Graph partition:根据每个node所device,将node划分成不同的subgraph, subgraph之间添加send和recv节点做不同device之间的通信。

- CeateExecutors:每个device的subgraph会创建一个Executor来执行graph computation。

- Fetch outputs:对于DirectSession来说,FeedInputs和FetchOutputs 所添加的节点是

_Arg和_RetVal,这两个节点会通过directSession的callframe来读写input,output。

RewriteGraph

RewriteGraph这块的callstack如下图所示,主要主要涉及到 GraphExecutionState, SubGraph, Placer这三块。

GraphExecutionState据文档所说(graph_execution_state.h),其主要作用是按照BuildGraphOptions选项将Graph转换成可执行的Client Graph。

GraphExecutionState is responsible for generating an executable ClientGraph from the original GraphDef that specifies the complete graph and from BuildGraphOptions which specifies input/output nodes.

ClientGraph与GraphDef的区别是: ClientGraph中每个node都被Assign了某个Device,这部分由Placer完成;另外添加了input/output nodes, 去掉了执行不到的node, 这部分由subgraph完成。

An executable Graph differs from a GraphDef by being Placed, meaning that each Node is assigned to a single Device in the available set.

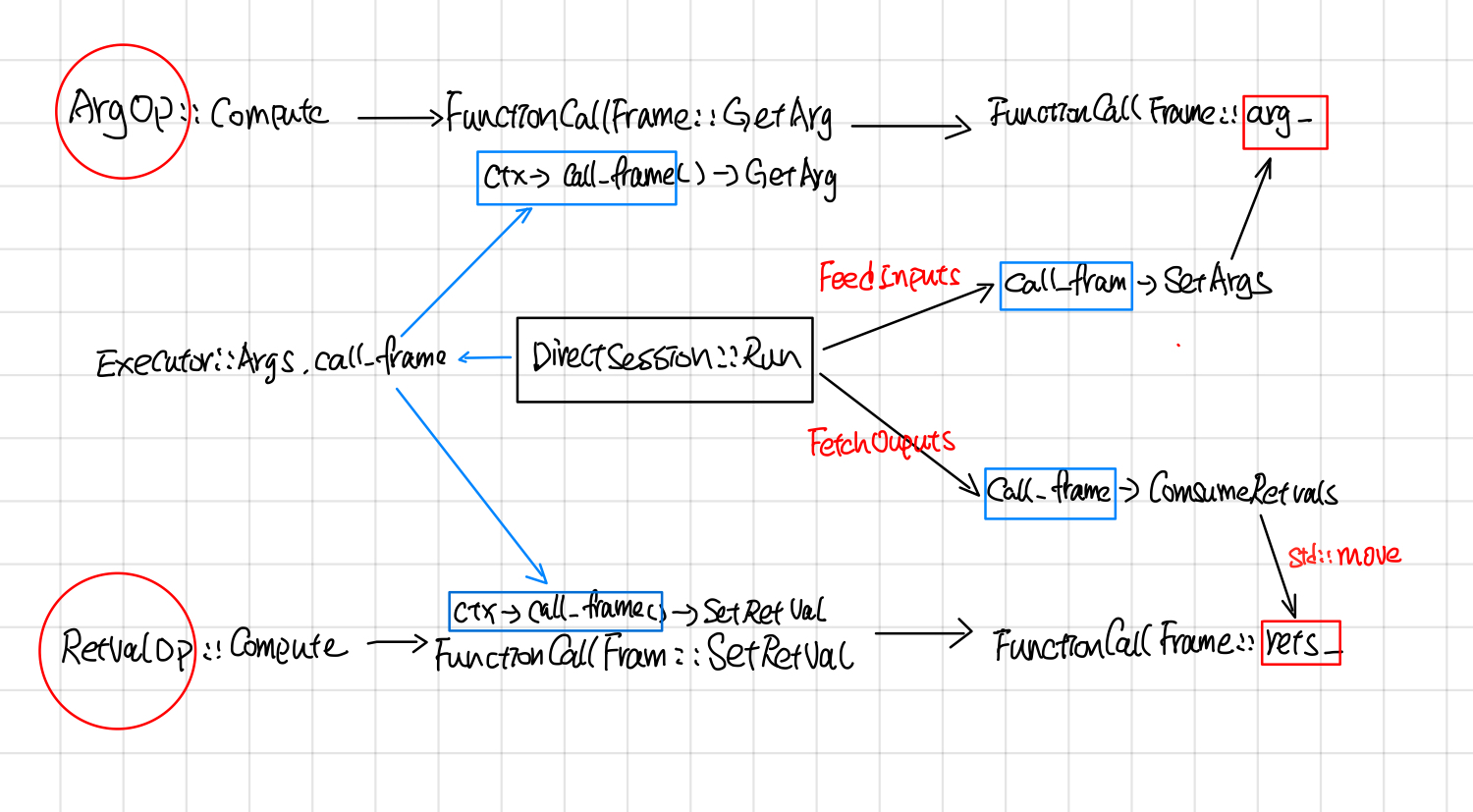

Call frame: feed and fetch

DirectSession中采用了call frame的方式读写compution graph中的inputs/outputs

DirectSession::Run的时候,首先会创建一个FunctionCallFrame, 把要feed的tensor填充到FunctionCallFrame::args_。

// In DirectSession::Run

FunctionCallFrame call_frame(executors_and_keys->input_types,

executors_and_keys->output_types);

gtl::InlinedVector<Tensor, 4> feed_args(inputs.size());

for (const auto& it : inputs) {

if (it.second.dtype() == DT_RESOURCE) {

Tensor tensor_from_handle;

TF_RETURN_IF_ERROR(

ResourceHandleToInputTensor(it.second, &tensor_from_handle));

feed_args[executors_and_keys->input_name_to_index[it.first]] =

tensor_from_handle;

} else {

feed_args[executors_and_keys->input_name_to_index[it.first]] = it.second;

}

}

const Status s = call_frame.SetArgs(feed_args);

在创建Executor的时候,通过Executor::Args.call_frame把call_frame放到OpkernalContext中。

//## DirectSessioin::Runinternal

Executor::Args args;

args.step_id = step_id;

args.call_frame = call_frame;

//other code...

//每个device subgraph对应一个item, item.executor为这个subgraph的exeuctor.

item.executor->RunAsync(args, barrier->Get());

//## ExecutorState::Process

OpKernelContext::Params params;

params.step_id = step_id_;

params.call_frame = call_frame_;

//other code ...

// Synchronous computes.

OpKernelContext ctx(¶ms, item.num_outputs);

nodestats::SetOpStart(stats);

device->Compute(CHECK_NOTNULL(op_kernel), &ctx);

当所有的subgraph Executor执行完毕后,通过FunctionCallFrame::ConsumeRetVals的方式把输出的tensor取出来。

// DirectSession::Run

if (outputs) {

std::vector<Tensor> sorted_outputs;

const Status s = call_frame.ConsumeRetvals(&sorted_outputs);

if (errors::IsInternal(s)) {

//other code

Device placer

Placer 在初始的时候,用户会指定某些节点的device, 比如有的节点是gpu:0, 有的cpu:0, 有的node是gpu:1, 然后将有相同class_属性@loc:xxx的node节点放到一个集合里面,随后根据以下约束, 采用并查集的方式,对node集合进行进一步的划分:

- 用户指定了device,就将node放到用户指定的device上

- Generateo node 和output node放到同一个device上

- Meta node(比如cast操作) 和input node放到同一个device上

- Reftype 的Input, input和output节点尽量放到同一个device上

- 采用并查集的方式将node place给device

- 对于stateful的node, 不改变它的device assign。

stateful node 在placed之后,就不能移到别的device上了, 对于这种node,GraphExecutionState的做法是在placer run之前将stateful node的device assign保存以下,在placer run 之后再恢复回去。

Map of placed stateful nodes, i.e. nodes for which is_stateful() is true, such as "params" and "queue" nodes. Once placed these nodes can not be moved to a different device. Maps node names to device names.

可以通过打开log_device_placement的方式让placer在stderr中把node的device place情况打出来:

config=tf.ConfigProto(log_device_placement=True)

sess = tf.Session(config=config)

Graph partition

Graph partition根据上面Placemnet的结果,将graph partition成不同的子图,子图之间添加send 和recv节点,send和recv节点会用rendzvous来传送tensor。有时候除了send和recv node还需要添加一些control flow node。

(这个地方需要看下tf implement那个文档,了解下具体情况)

Executor Cache

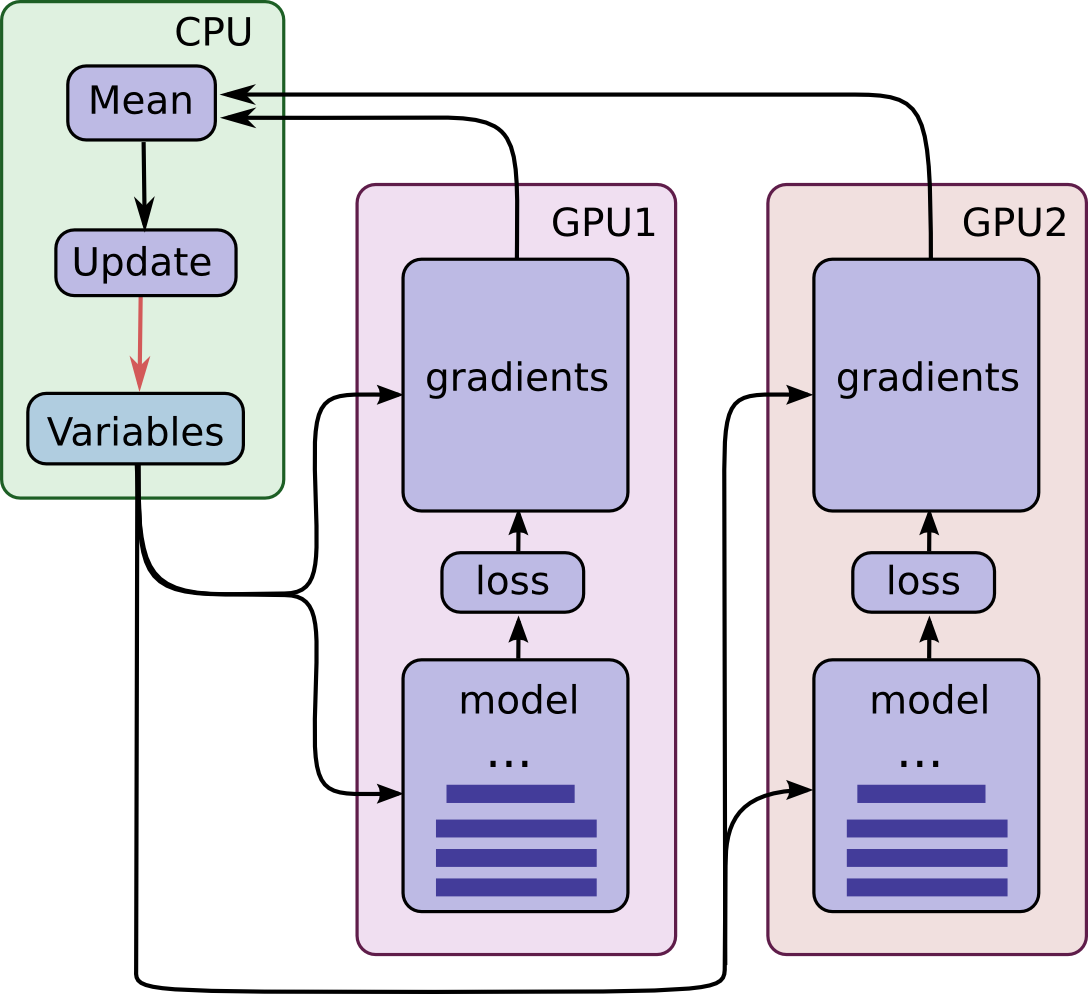

提交给DirectSessoin在经过Graph Partition之后,会划分成不同的子图,比如下图将一个大的graph划分成了3个subgraph分别放置在了在CPU, GPU1, GPU2上,device之间通过rendezvous来通信,每个subgraph都会创建一个executor去执行。

在模型的训练通常会多次迭代run, 因此要加一层cache避免多次做graph的parition,多次创建executor。

with tf.Session(config=config) as sess:

sess.run([merge, gd_step], feed_dict={x: batch_xs, y_label: batch_ys})

cache的key为input, output,target tensor的names 连起来的。还有一个key是吧input, output, target的names分别sort之后再连起来。

DirectSession::Run中cache的key很有意思,有两个key, 首先去是未排序的,另外一个是排序的。未排序的为了快速查找,而排序的key是为了避免由于input_names中names顺序不一样导致cache miss。

// Fast lookup path, no sorting.

// Fast查询的key, 没排序

const string key = strings::StrCat(

str_util::Join(inputs, ","), "->", str_util::Join(outputs, ","), "/",

str_util::Join(target_nodes, ","), "/", run_state_args->is_partial_run,

"/", debug_tensor_watches_summary);

// 将names分别排序然后concat起来.

std::vector<string> inputs_sorted(inputs.begin(), inputs.end());

std::sort(inputs_sorted.begin(), inputs_sorted.end());

std::vector<string> outputs_sorted(outputs.begin(), outputs.end());

std::sort(outputs_sorted.begin(), outputs_sorted.end());

std::vector<string> tn_sorted(target_nodes.begin(), target_nodes.end());

std::sort(tn_sorted.begin(), tn_sorted.end());

const string sorted_key = strings::StrCat(

str_util::Join(inputs_sorted, ","), "->",

str_util::Join(outputs_sorted, ","), "/", str_util::Join(tn_sorted, ","),

"/", run_state_args->is_partial_run, "/", debug_tensor_watches_summary);

// Set the handle, if its needed to log memory or for partial run.

Tensorflow Rendezvous

摘要

Rendezvous负责在Send和Recv node之间传递tensor, tensor的传递可能会跨设备(cross device), 也可能跨主机(GRPC,MPI,Rdam)等。如何提供统一简洁的接口,并同时实现不同场景下tensor高效传递是关键,Rendezvous功能上主要涉及以下两点:

- Send操作不会被block,而Recv操作可能会block,一直等到有tensor,才会返回或者调用异步的callback。

- 由于send 和recv node可能在同一个worker的不同device上,也有可能在不同worker的不同device上,所以Rendezvous又分为LocalRendezvous, IntraProcessRendezvous, RemoteRendezvous 以对应不同的场景。

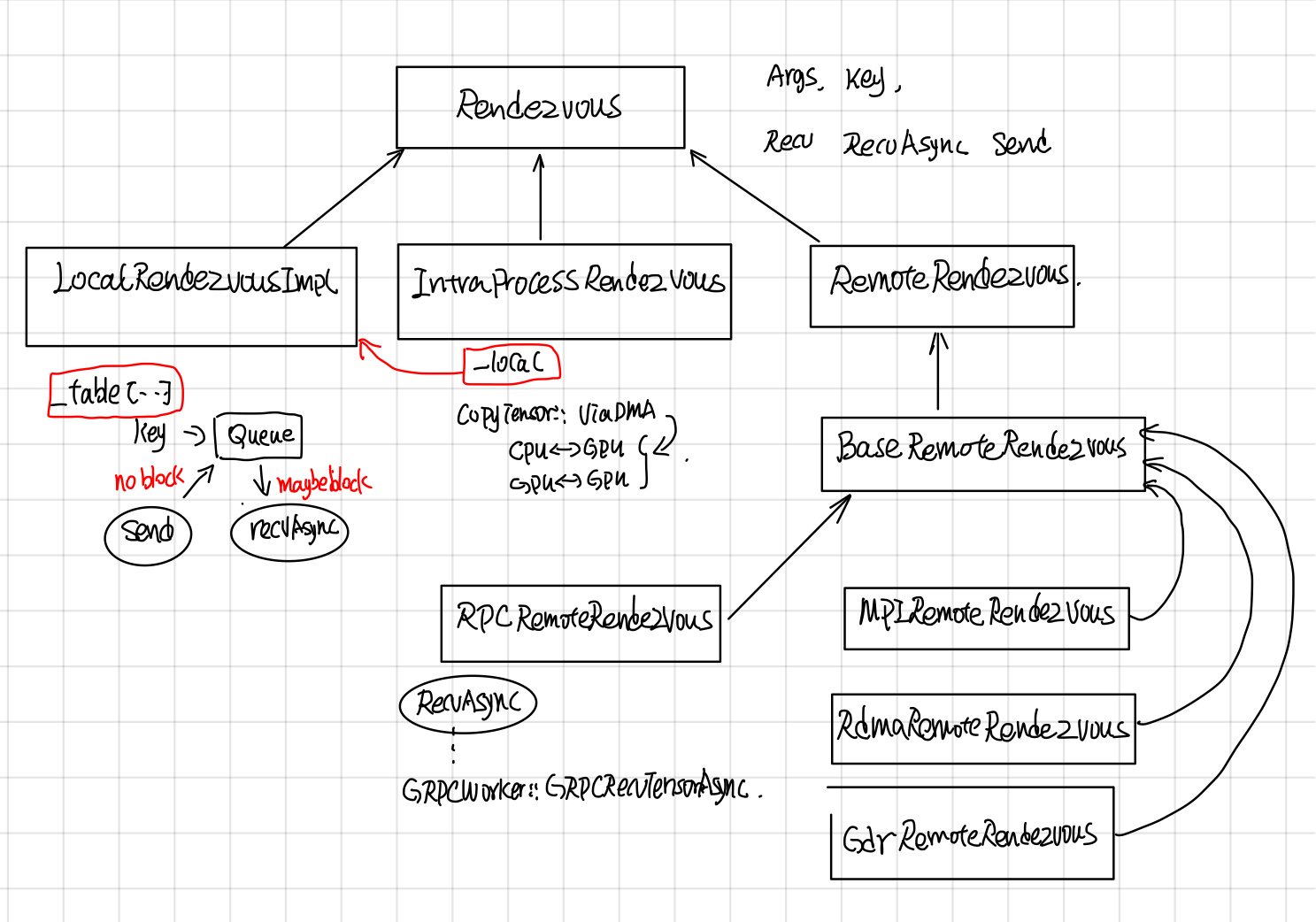

Rendezvous

继承关系

Rendezvous中各个层级实现功能如下:

- LocalRendezvor实现了核心Send和Recv操作,每个key对应了一个queue, send根据key放到相应的队列里,recv根据key去对应的队列取。

- IntraProcessRendezvou使用CopyTensor::ViaDMA处理了不同device间的copy问题,其send, recv还是会交由LocalRendezvous去做。

- RpcProcessRendezvous实现了将woker的本地tensor(tensor如果在GPU上的话,需要先从GPu上copy到内存中)通过grpc buffer传递给调用者。

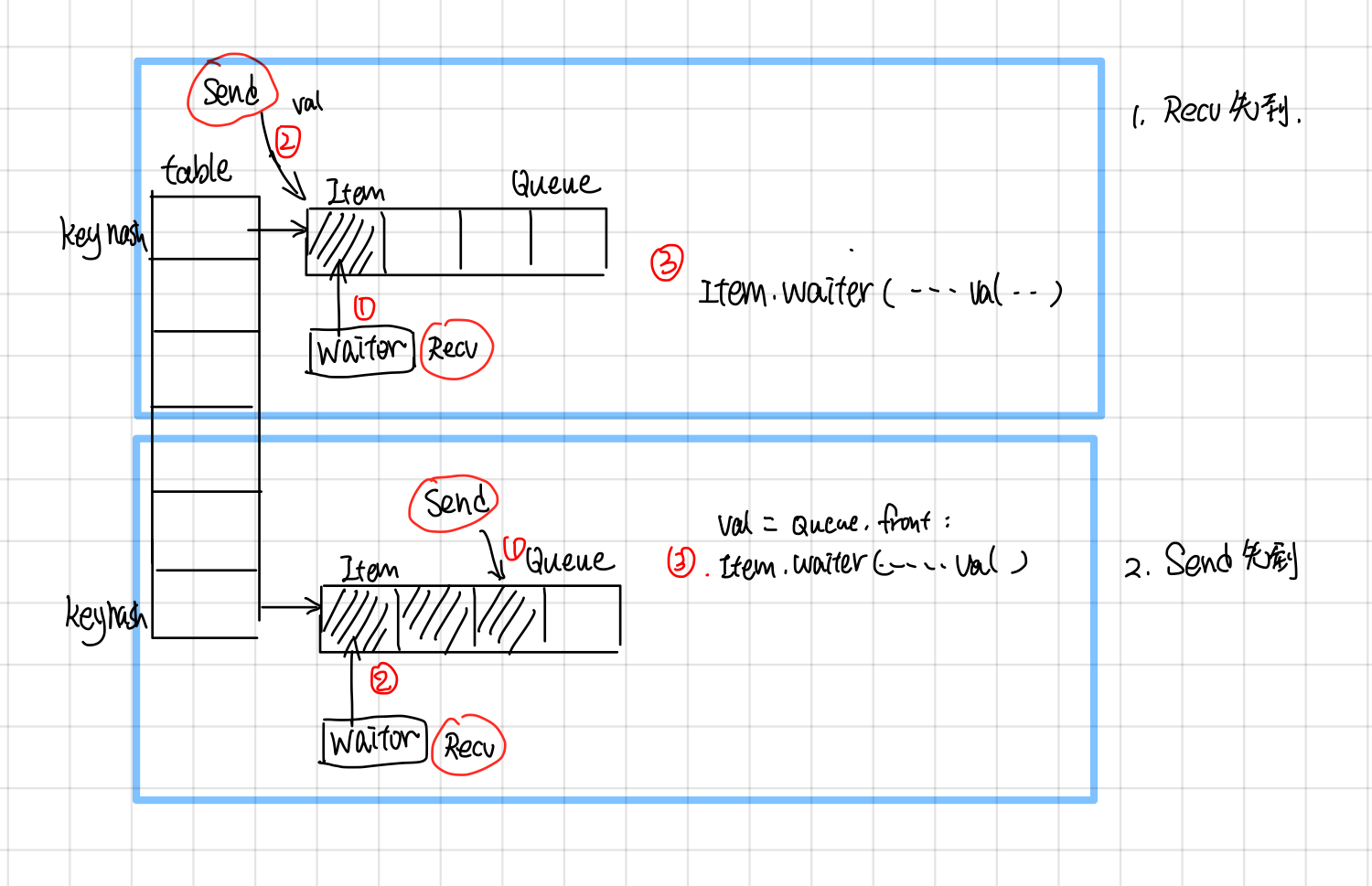

LocalRendezvous: Send and Recv

LocalRendezvous 实现了send和recv最基本的操作,按照send请求和recv请求顺序做了不同的处理:

-

如果recv先到,就新创建一个item,把recv请求放到queue里面,等待send tensor抵达的时候,调用item.waiter回调函数通知recv, tensor已经到了。

-

如果send先到,就新创建一个item, 把item放到queue里面,等recv请求到达的时候,从队列中取出最开头的一个,调用recv.waiter回调函数,通知tensor已经到了。这里send请求就是简单的把tensor放入key对应的队列中,并不会block住。

IntraProcessRendezvous

IntraProcessRendezvous 用于处理进程内的通信, 他的send和recv是委托给LocalRendezvous, 在Local的RecvAsync的回调函数中,它会调用SameWokerRecvDone, 使用CopyTensor::ViaDMA处理跨device通信问题。

void IntraProcessRendezvous::SameWorkerRecvDone(...)

//other code ...

//case 1:都在内存中,直接用使用tensor的operator=

if (src_host && dst_host) {

*out = in;

done(Status::OK());

return;

}

//other code ...

//case 2: 使用ViaDMA处理不同device之间的tensor通信

CopyTensor::ViaDMA(parsed.edge_name, send_args.device_context,

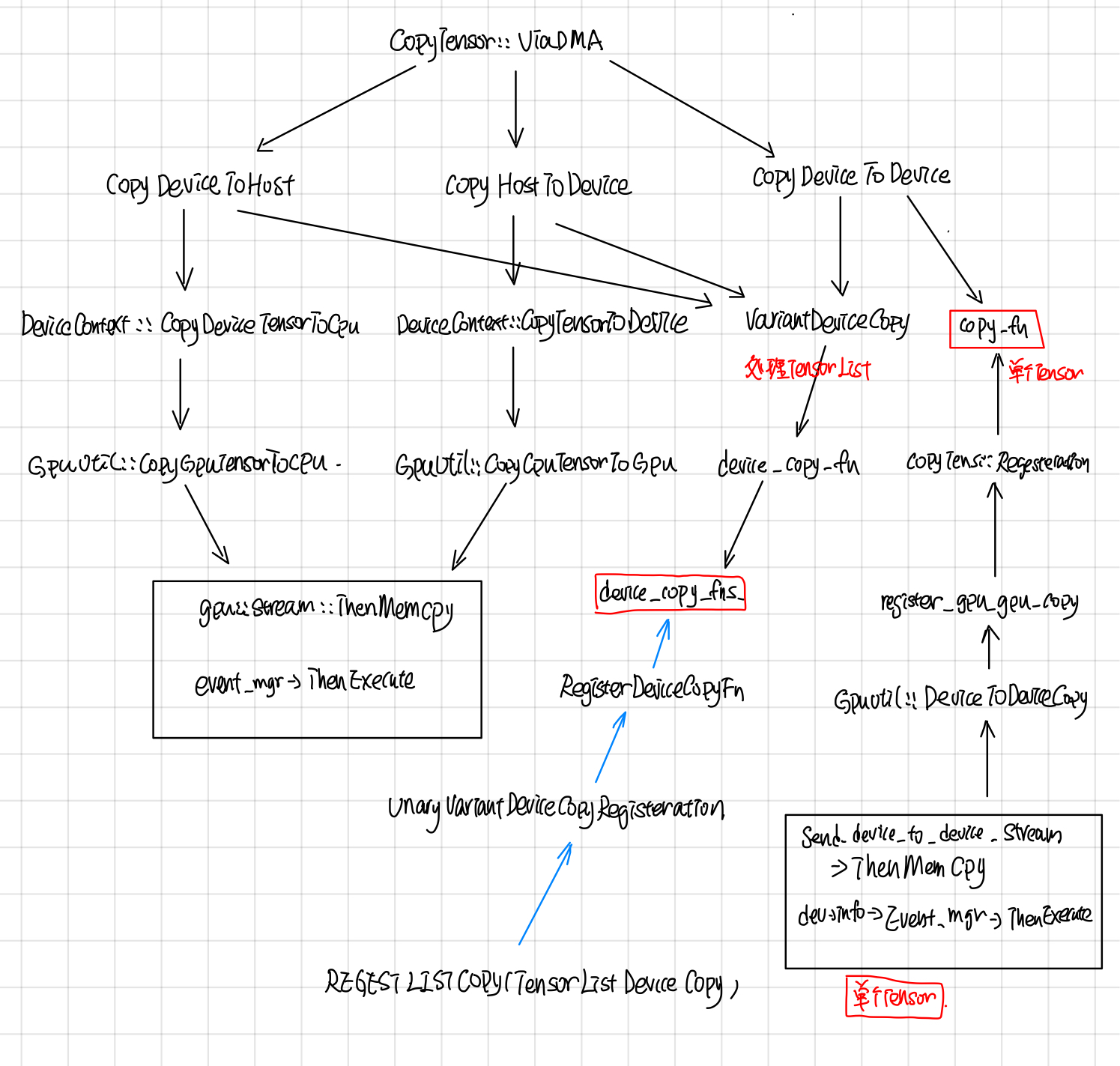

CopyTensor::ViaDMA

CopyTensor::ViaDMA处理了device之间的copy tensor。 Tensor的copy有3个方向:

- HOST_TO_DEVICE

- DEVICE_TO_HOST

- DEVICE_TO_DEVICE

从下图可以看出这些操作最终调用的还是stream_executor的ThenMemcpy所封装的函数。

VarientDeviceCopy这个处理数据是DT_VARIENT结构的Tensor的,最后调用的是TensorListeDeviceCopy函数,这个函数所对应的deviceCopyFn就是stream_executor所封装的Memcpy, 这里的VarientDeviceCopy和copyfn都采用了static registor的模式(这种模式在tensorflow中用的非常多)。

static Status TensorListDeviceCopy(

const TensorList& from, TensorList* to,

const UnaryVariantOpRegistry::AsyncTensorDeviceCopyFn& copy) {

to->element_shape = from.element_shape;

to->element_dtype = from.element_dtype;

to->tensors.reserve(from.tensors.size());

for (const Tensor& t : from.tensors) {

Tensor tmp(t.dtype());

TF_RETURN_IF_ERROR(copy(t, &tmp));

to->tensors.push_back(tmp);

}

return Status::OK();

}

BaseRemoteRendezvous

BaseRemoteRendezvous 的RecvAsync中会检查是否是同一个recv 和sender是否在同一个worker上。

// 检查是否是同一个worker

bool BaseRemoteRendezvous::IsSameWorker(DeviceNameUtils::ParsedName src,

DeviceNameUtils::ParsedName dst) {

return DeviceNameUtils::IsSameAddressSpace(src, dst);

}

如果是同一个worker的话就采用类似IntraProcessRendezvous方式来处理,否则需要通过远程调RecvFromRemoteAsync。

void BaseRemoteRendezvous::RecvAsync(const ParsedKey& parsed,

//other code ..

//case1: 是同一个worker, 说明在本地上

if (IsSameWorker(parsed.src, parsed.dst)) {

local_->RecvAsync(

parsed, recv_args,

[this, parsed, done](

//other code ...

//in recv done callback

SameWorkerRecvDone(parsed, send_args, recv_args, in, out,

} else {

//case2: 不是同一个worker需要用RPC 去取。

RecvFromRemoteAsync(parsed, recv_args, std::move(done));

}

RemoteRendezvous中加了个一个Initialize的接口, 这样绑定了一个WorkerSession, 然后在SameWorkerRecvDone的时候,通过这个workerSession去找到对应的device。

Status BaseRemoteRendezvous::Initialize(WorkerSession* session) {

//other codes...

}

在SameWorkerRecvDone中通过workerSession找到src_device和dst_device

void BaseRemoteRendezvous::SameWorkerRecvDone(

//other code ...

Status s = sess->device_mgr->LookupDevice(parsed.src_device, &src_device);

//other code ...

s = sess->device_mgr->LookupDevice(parsed.dst_device, &dst_device);

//other code ..

//通过ViaDMA实现各个device之间的copy

CopyTensor::ViaDMA(parsed.edge_name, send_args.device_context,

RpcRemoteRendezvous

RpcRemoteRendezvous在BaseRemoteRendezvous的基础上,实现了RecvFromeRemoteAsync的功能, 首先找到send所在的src_worker, 然后通过rpc调用去取的远程src_worker上的tensor。

void RpcRemoteRendezvous::RecvFromRemoteAsync(

//other code..

RpcRecvTensorCall* call = get_call_freelist()->New();

//1. 找到远程的src_worker

WorkerSession* sess = session();

WorkerInterface* rwi = sess->worker_cache->CreateWorker(call->src_worker_);

//2. 找到要copy到的device

s = sess->device_mgr->LookupDevice(parsed.dst_device, &dst_device);

//other code ..

//3. Grpc call

call->Init(rwi, step_id_, parsed.FullKey(), recv_args.alloc_attrs, dst_device,

recv_args, std::move(done));

call->Start([this, call]() {

//other code ..

在RpcRecvTensorCall中会call worker的RecvTensorAsync。

void StartRTCall(std::function<void()> recv_done) {

//other code

wi_->RecvTensorAsync(&opts_, &req_, &resp_, std::move(cb));

}

中间经过worker service,最终会去call GrpcWorker::GrpcRecvTensorAsync.

void GrpcWorker::GrpcRecvTensorAsync(CallOptions* opts,

// Case 1: 如果目标tensor在GPU上的话,需要先cp到host上

if (src_dev->tensorflow_gpu_device_info() && (!on_host)) {

StatusCallback copy_ready = [response, done, copy, is_dead](const Status& s) {

//other code ..

// copy到response buffer中

grpc::EncodeTensorToByteBuffer(is_dead, *copy, response);

done(s);

}

GPUUtil::CopyGPUTensorToCPU(src_dev, send_dev_context, &val, copy, copy_ready);

} else {

//Case 2: 在Host上直接cp到response的buffer中。

grpc::EncodeTensorToByteBuffer(is_dead, val, response);

done(Status::OK());

}

}

RendezvousMgr

RendezvousMgr的作用是维护一个从step_id到Rendezvous的映射。

RendezvousMgr keeps track of a set of local rendezvous instances. All tensors sent by this worker are buffered in a RendezvousMgr until the tensor is received. Each global unique "step_id" corresponds to one local rendezvous instance managed by a RendezvousMgr.

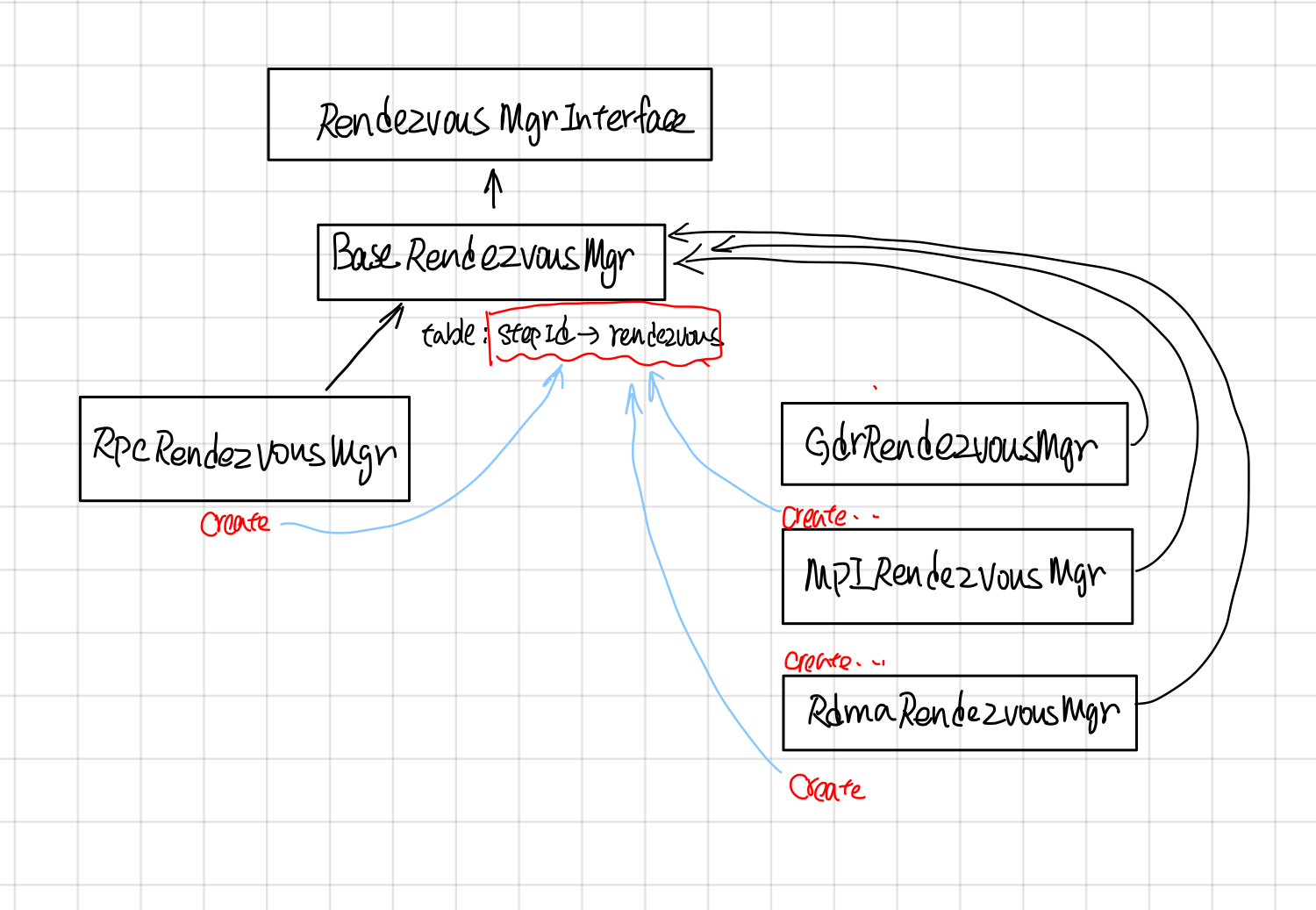

RendezvousMgr的继承关系如下

映射的table在BaseRendezvousMgr中。

//BaseRendezvousMgr的数据成员

typedef gtl::FlatMap<int64, BaseRemoteRendezvous*> Table;

mutex mu_;

Table table_ GUARDED_BY(mu_);

它的派生类比如RpcRendezvousMgr通过override它的Create函数来创建自己版本的rendezvous。

//BaseRendezvousMgr 的CreateRendezvous的纯虚函数

protected:

virtual BaseRemoteRendezvous* Create(int64 step_id,

const WorkerEnv* worker_env) = 0;

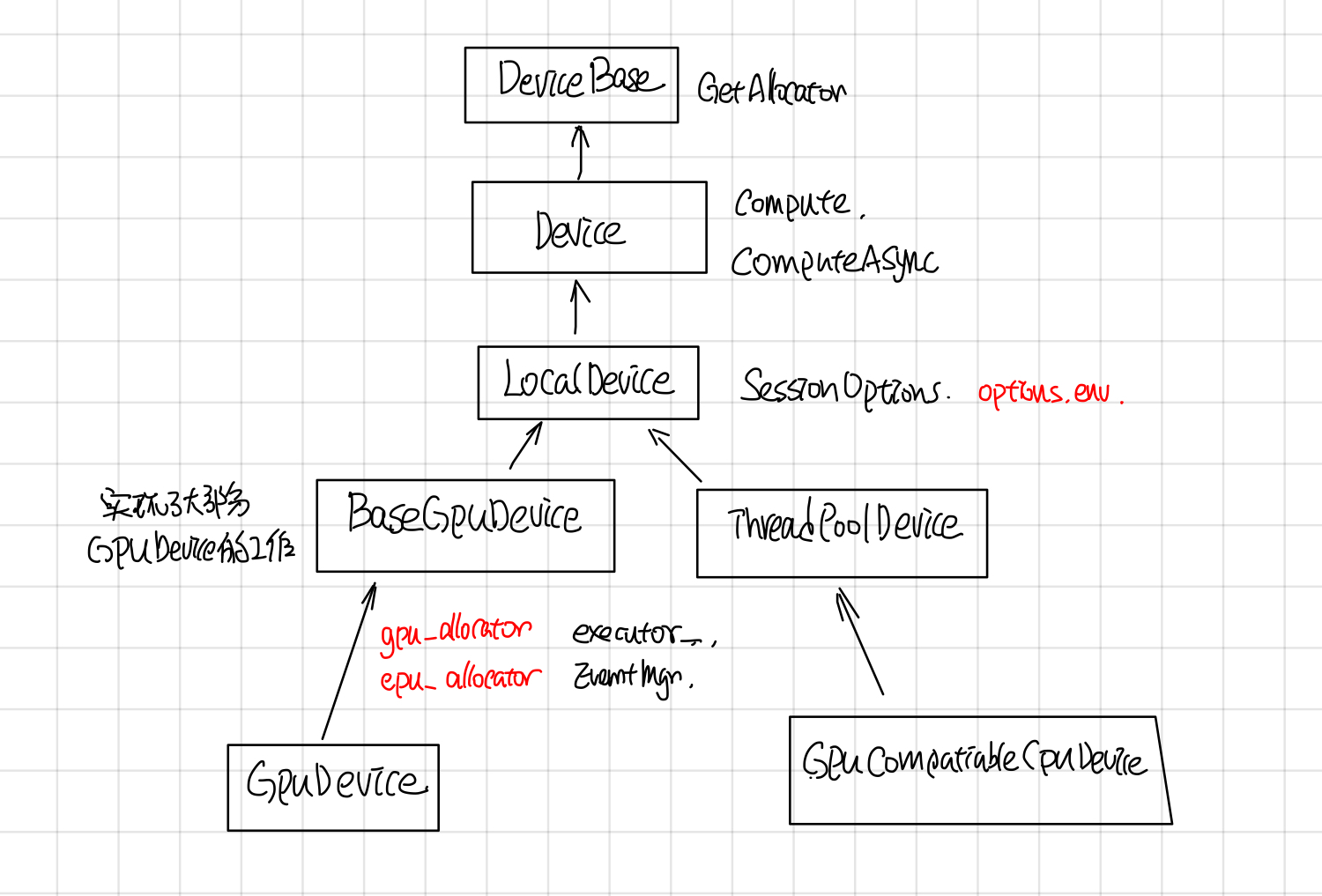

Tensorflow Device

摘要

Device包含了自己的memory的计算单元,它是对GPU, TPU, CPU等计算device统一抽象,主要的接口有以下几个:

- GetAllocator: 这个返回一个allocator,负责在device上分配memory

- Compute,ComputeAsync: 负责执行OpKernel中的运算。

- ResourceMgr: 负责管理分配在Device上的Variable

- tensorflow device thread pool: 调度执行device compute的线程池。

其中1,2最重要,分别负责allocate memory和执行opkernel的compute。

Device

Device的继承关系

Device thread pool

Gpu对应的线程池创建有三种模式:global, gpu_private, gpu_shared,由环境变量TF_GPU_THREAD_MODE控制, 默认是global的。

- global: GPU uses threads shared with CPU in the main compute, thread-pool. This is currently the default.

- gpu_private: GPU uses threads dedicated to this device.

- gpu_shared: All GPUs share a dedicated thread pool.

在DirectSession::Ruinternal调用executor的时候,会把device_thread_pool 传给Executor

// DirectSession::RunInternal

thread::ThreadPool* device_thread_pool =

item.device->tensorflow_device_thread_pool();

if (!device_thread_pool) {

args.runner = default_runner;

} else {

args.runner = [this, device_thread_pool](Executor::Args::Closure c) {

SchedClosure(device_thread_pool, std::move(c));

};

}

item.executor->RunAsync(args, barrier->Get());

}

在分布式tensorflow中,GraphMgr::StartParallelExecutors, 通过类似的方法吧device_thread_pool 传给executor。

//GraphMgr::StartParallelExecutors

thread::ThreadPool* device_thread_pool =

item.device->tensorflow_device_thread_pool();

if (!device_thread_pool) {

args.runner = default_runner;

} else {

args.runner = [this, device_thread_pool](Executor::Args::Closure c) {

SchedClosure(device_thread_pool, std::move(c));

};

}

item.executor->RunAsync(args, barrier->Get());

}

在Executor::schedulReady中,会使用这个runner去执行node的process。

// Executor::ScheduleReady

//Case 1

//other code and

// Schedule to run all the ready ops in thread pool.

runner_([=]() { Process(tagged_node, scheduled_usec); });

//other code and if...

// Dispatch to another thread since there is plenty of work to

// do for this thread.

runner_(std::bind(&ExecutorState::Process, this, *curr_expensive_node, scheduled_usec));

//other code under some if ...

// There are inline nodes to run already. We dispatch this expensive

// node to other thread.

runner_(std::bind(&ExecutorState::Process, this, *curr_expensive_node, scheduled_usec));

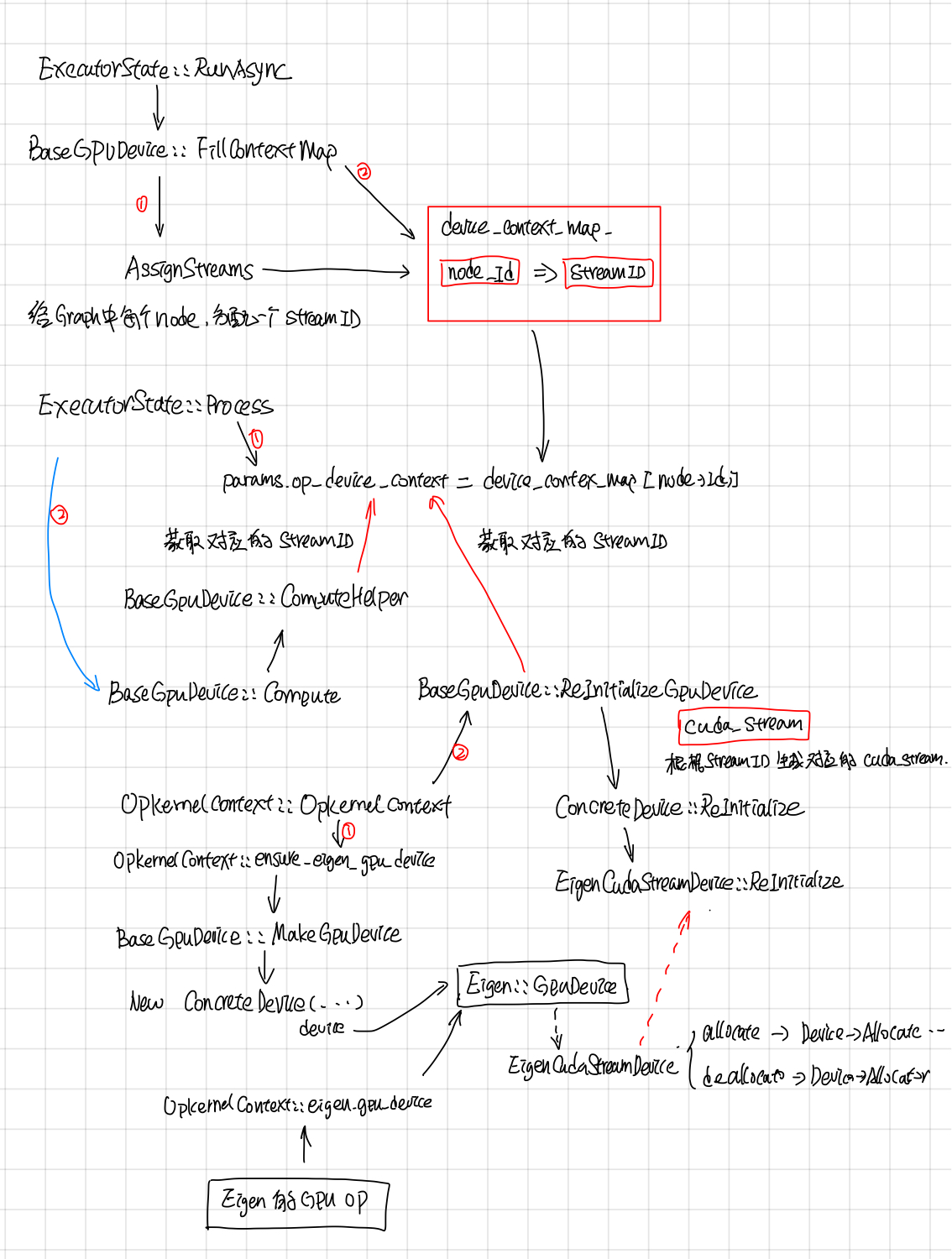

Device Context

GpuDeviceContext有点复杂,有不少的代码逻辑是用来处理一个GPU 启动了多个streams的,graph中的每个node会分配一个stream_id。

device context map

每个node对应OpKernel的device_context会使用这个stream_i来CopyCpuTensorToDevice, CopyDeviceTensorToCpu, 在Compute的时候,opkernel的计算也会这个stream_id对应的stream上执行。

不过现在好玩的是现在BaseGPuDevice的构造函数中max_stream传的值为1,使用多个stream的特性没开,大家用的是同一个stream,在stackflow上搜到了一个为啥这么做的回答:

Yeah, you are looking at code that is a bit stale; we've tried experimenting with multiple compute streams and have found that, so far, it hasn't helped that much in important use cases. We technically support multiple streams, but we never turn it on.

At some point in the future, we want to start playing around with using multiple compute streams again though, so it's nice to have the code there.

Devices can use as many DeviceContexts as they want; on GPU we only use a few and we use FillContextMap to do the mapping, but it really depends on the hardware how they should be used

目前这个特性是实验性的,在重要的use cases中没起到重要的作用,所以这个特性没开, 后续可能会开,所以这部分代码保留了。

除此之外,还在stream_id的基础上做了一个EigenDevice,估计是给Eigen计算提供的吧。无论怎样,DeviceContext给每个Opkernel包了stream_id,然后在执行的时候,会找到这个stream_id对应的cuda_stream。

Eigen::GpuDevice

给Eigen::GpuDevice封装了一个EigenCudaStreamDevice, 用来给Eigen::GpuDevice allocate和deallocate memroy, 具体的怎么用的估计要去挖Eigen的代码了, 还有scratch buffer的作用也不是很明白。

class EigenCudaStreamDevice : public ::Eigen::StreamInterface

// allocate

void* allocate(size_t num_bytes) const override{

//使用device的allocate进行内存分配

}

//deallocate

void deallocate(void* buffer) const override {

//异步的AsyncFreeData,最终调用的是Device的allocate去free内存

}

Compute

Gpu的Compute部分主要有BaseGpuDevice::ComputeHelper来处理,主要是如果gpu使用了多个stream特性的话,需要等待input的stream都完成之后,再执行op对应的stream。

void BaseGPUDevice::ComputeHelper(OpKernel* op_kernel,

//如果是多个stream,需要等待所有input的stream执行完毕。

if (num_streams > 1) {

// If this op's device context is different from the other contexts,

// we must wait on the stream.

for (int i = 0; i < context->num_inputs(); ++i) {

const GPUDeviceContext* idc =

static_cast<GPUDeviceContext*>(context->input_device_context(i));

//other code: 主要是log

if (idc->stream() != stream) stream->ThenWaitFor(idc->stream());

}

gpu::cuda::ScopedActivateExecutorContext scoped_activation{stream->parent()};

op_kernel->Compute(context);

//other code: 主要是cuda执行状态检查

Device Factory

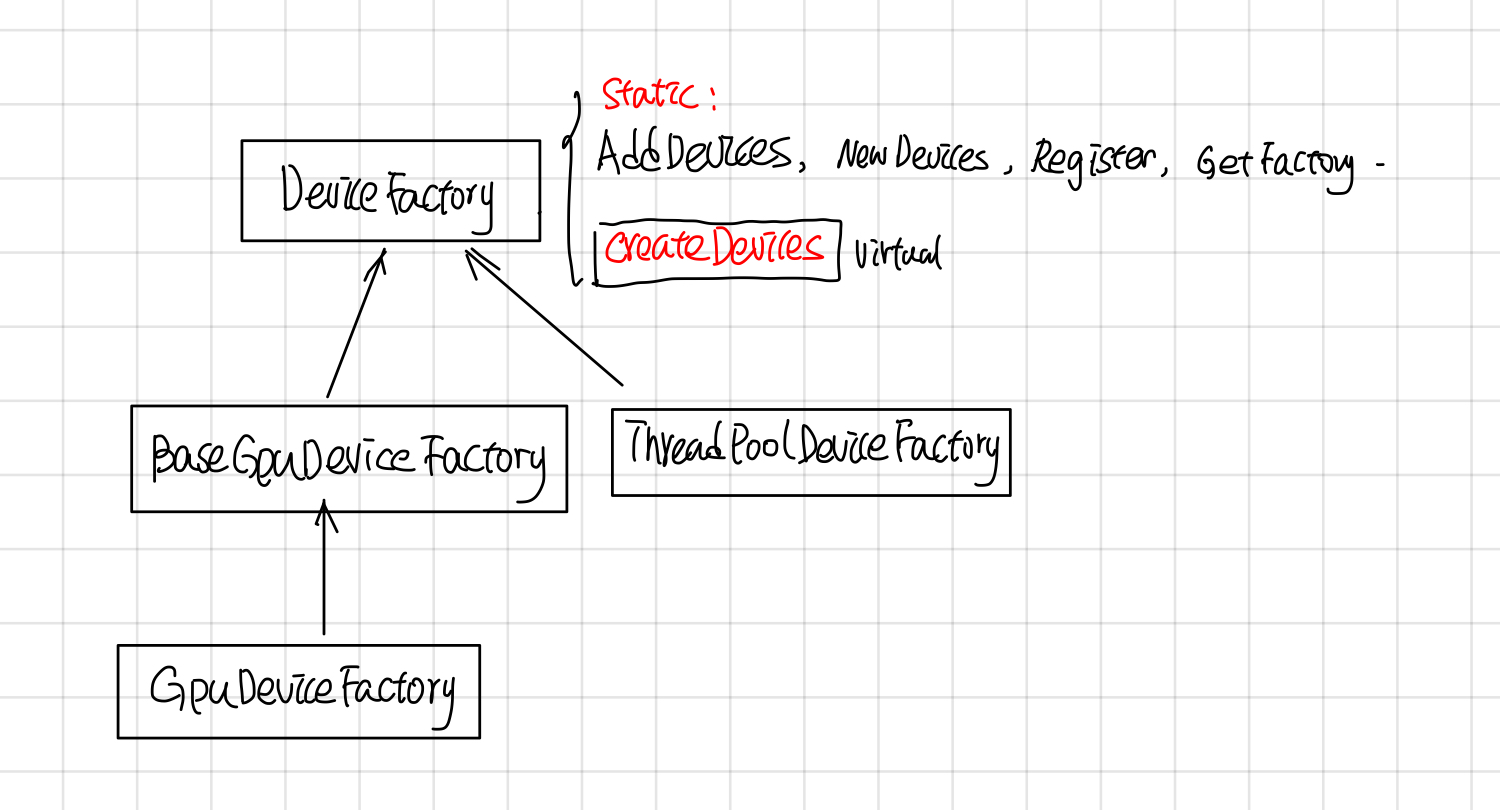

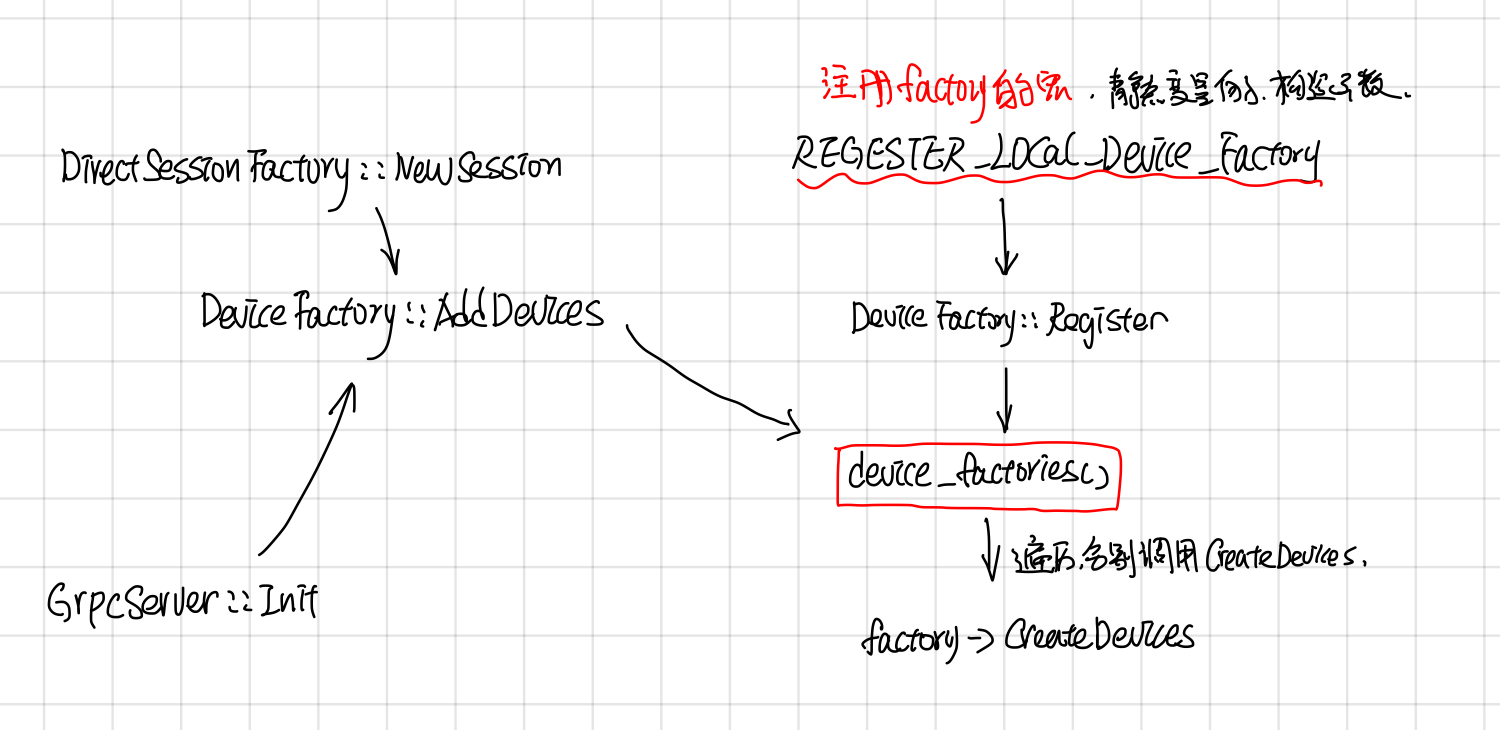

DeviceFactory的继承关系如下:

DeviceFactory包含了一些静态函数: AddDevices, NewDevices, Register, GetFactory, 和一个virutal CreateDevices。 NewDevices用于自动化测试,对外主要接口是AddDevices, Register负责device factory的注册, 这两者的调用关系如下:

DeviceFactory也采用了static registor的方法,自动注册了DeviceFactory,

//device_type, DeviceFactoryClass, Prority

REGISTER_LOCAL_DEVICE_FACTORY("CPU", ThreadPoolDeviceFactory, 60);

REGISTER_LOCAL_DEVICE_FACTORY("CPU", GPUCompatibleCPUDeviceFactory, 70);

REGISTER_LOCAL_DEVICE_FACTORY("GPU", GPUDeviceFactory, 210);

这个宏展开后是声明了一个Registrar的 static var, 在它的构造函数中会去调用DeviceFactory的Register注册Factory, 而Register函数最后会把Factory 加入到static device_factories中。

template <class Factory>

class Registrar {

public:

explicit Registrar(const string& device_type, int priority = 50) {

DeviceFactory::Register(device_type, new Factory(), priority);

}

}

在创建一个DirectSesion, 或者GrpServer::Init(每个worker都会起一个GrpcServer)的时候,会调用AddDevices获取worker上的devices.

tensorflow model optimize

将keras模型导出为tf frozen graph

frozen keras model

将keras的h5文件转换为tensorflow的pb文件, 这里面使用了 convert_variables_to_constants将模型中的变量都convert成了常量(方便后续采用quantilize或者tensorrt, 对模型推断部分做进一步的优化)

import keras

from keras.layers.core import K

import tensorflow as tf

def frozen_keras_model(keras_model_path, output_node_names, export_path):

output_node_namess = output_nodes.split(",")

model = keras.models.load_model(keras_model_path)

print("the model output nodes is {}".format(model.outputs))

with K.get_session() as sess:

output_graph_def = tf.graph_util.convert_variables_to_constants(

sess,

tf.get_default_graph().as_graph_def(),

output_nodes_names,

variable_names_blacklist=['global_step']

)

with tf.gfile.Gfile(export_path, "wb") as f:

f.write(output_graph_def.SerializeToString())

将global_step放到variable_names_blacklist是因为2中的bug.

variable_names_blacklist=['global_step']

可以通过print model.outputs来查看keras的输出节点,可以通过tensorboard来看keras模型,然后找到最后的输出节点。一般keras模型的输出节点有好多个(比如训练用的之类的),预测输出节点为其中的一个。

使用tensorboard展示keras model对应的graph

首先使用tf summary创建相应的log

def keras_model_graph(keras_model_path, log_dir):

model = keras.model.load_model(keras_model_path)

with K.get_session() as sess:

train_writer = tf.summary.FileWriter(log_dir)

train_writer.add_graph(sess.graph)

启动tensorboard

$tensorboard --log_dir logdir

参考文献

使用dataset iterator 优化keras model预测的吞吐量

predict_on_generator

现在做的项目,需要在短时间内一次性预测一组大量的图片,刚开始的时候,采用了keras的predict_on_generator和Sequnce,速度比一个个feed dict的形式快了不少, 但是吞吐量还是没达到要求,感觉还有优化的地方。

class BatchSequnce(Sequence):

def __len__(self):

# 返回batch总个数

return self.batch_count

def __getitem__(self, idx):

#返回一个batch的数据

#这里可能会做一些数据预处理的工作,比如将图片从文件中加载到内存中然后做特征预处理

pass

model = keras.load_model(model_path)

generator = BatchSequnce(....)

ret = model.predict_generator(

generator=generator,

steps=None,

workers=10,

verbose=True,

)

Dataset

经分析, GPU每次都要等 BatchSequnce的__getitem___处理完之后,才能fetch到数据,如果__getitem__做了比较耗时间的操作的话,会让GPU一直在等待, 而且GPU在处理每个Batch数据的时候,都要等一次, tensorflow的Prefech感觉可以缓解这个问题,后来尝试了下,所消耗的时间优化到了以前的70%左右。

使用iterator 改造keras模型

-

首先采用将keras模型导出为tf frozen graph中的方式,将Keras的h5模型转换成tensorflow的pb文件。

-

使用

tf.data.Iterator.from_structure(可重新初始化迭代器可以通过多个不同的 Dataset 对象进行初始)的形式, 声明iterator的输出dtype和TensorShape, -

调用

tf.import_graph_def导入模型, 导入的时候,使用input_map将placeholde,比如"input"替换成Dataset的itereator next_element

这部分代码如下

def load_model(self, sess, frozen_model_file):

with tf.name_scope("dataset"):

iterator = tf.data.Iterator.from_structure(

tf.float32,

tf.TensorShape([self.batch_size, 450, 450, 3]))

next_element = iterator.get_next()

next_element = tf.convert_to_tensor(next_element, tf.float32)

with tf.gfile.GFile(frozen_model_file, "rb") as f:

graph_def = tf.GraphDef()

graph_def.ParseFromString(f.read())

tf.import_graph_def(

graph_def,

name="",

input_map={"input_1:0": next_element})

output_op_name = "y"

output_op = sess.graph.get_operation_by_name(output_op_name).outputs[0]

return iterator, output_op

设计DataSet

这里面需要注意的时候, 真正的map函数需要采用py_func包一层, 同事指定py_func的输出tensor shape, 这里的num_map_parall一般取cpu的个数.

class DataSetFactory(object):

def make_dataset(self):

def generator():

#返回要处理的文件路径, 或者坐标等

yield [x, y, w, h]

output_types = (tf.float32)

output_shapes = (tf.TensorShape([4]))

ds = tf.data.Dataset.from_generator(

generator,

output_types,

output_shapes=output_shapes)

ds = ds.map(lambda region: self.map_func(region), num_map_parall=80)

ds = ds.prefetch(buffer_size=self.batch_size * 256)

ds = ds.batch(self.batch_size)

ds = ds.prefetch(buffer_size=self.batch_size * 10)

return ds

def map_func(self, region):

def do_map(region):

# 加载图片和预处理

return img_data

# 这里采用了py_func,可以执行任意的Python函数,同时需要后面通过reshape的方式设置

# image_data的shape。

img_data = tf.py_func(do_map, [region], [tf.float64])

img_data = tf.reshape(img_data, [450, 450, 3])

img_data = tf.cast(img_data, tf.float32)

return image_data

prefetch_to_device

tensorflow 后来加了prefetch_to_device, 经测试可以提高5%左右的效率吧,但是和structure iterator初始化的时候有冲突,因此这个地方把它去掉了。

# 由于prefech_to_device必须是dataset的最后一个处理单元,

# structure iterator用这个ds初始化的时候会有问题,

# 因此这个地方将prefetch_to_gpu注释掉了

# gpu_prefetch = tf.contrib.data.prefetch_to_device(

# "/device:GPU:0",

# buffer_size=self.batch_size * 10)

# ds = ds.apply(gpu_prefetch)

使用dataset初始化iterator

def init_iterator(self, dataset):

# 这里的output_op就是load_model时返回的iterator

init_iterator_op = self.iterator.make_initializer(dataset)

self.sess.run(init_iterator_op)

def predict(self):

# 这里的output_op就是load_model时返回的output_op

while True:

outputs = self.sess.run(self.output_op)

统计gpu,cpu利用率脚本

#!/bin/bash

start=$(date +%s)

while [ 1 ]

do

cpu=$(awk -v a="$(awk '/cpu /{print $2+$4,$2+$4+$5}' /proc/stat; sleep 1)" '/cpu /{split(a,b," "); print 100*($2+$4-b[1])/($2+$4+$5-b[2])}' /proc/stat)

seconds=$(expr $(date +%s) - $start)

gpu_util=$(nvidia-smi --query-gpu=utilization.gpu --format=csv,noheader,nounits)

echo "$seconds, $cpu, $gpu_util"

#sleep 1

done

pthread

Pthread primer 笔记

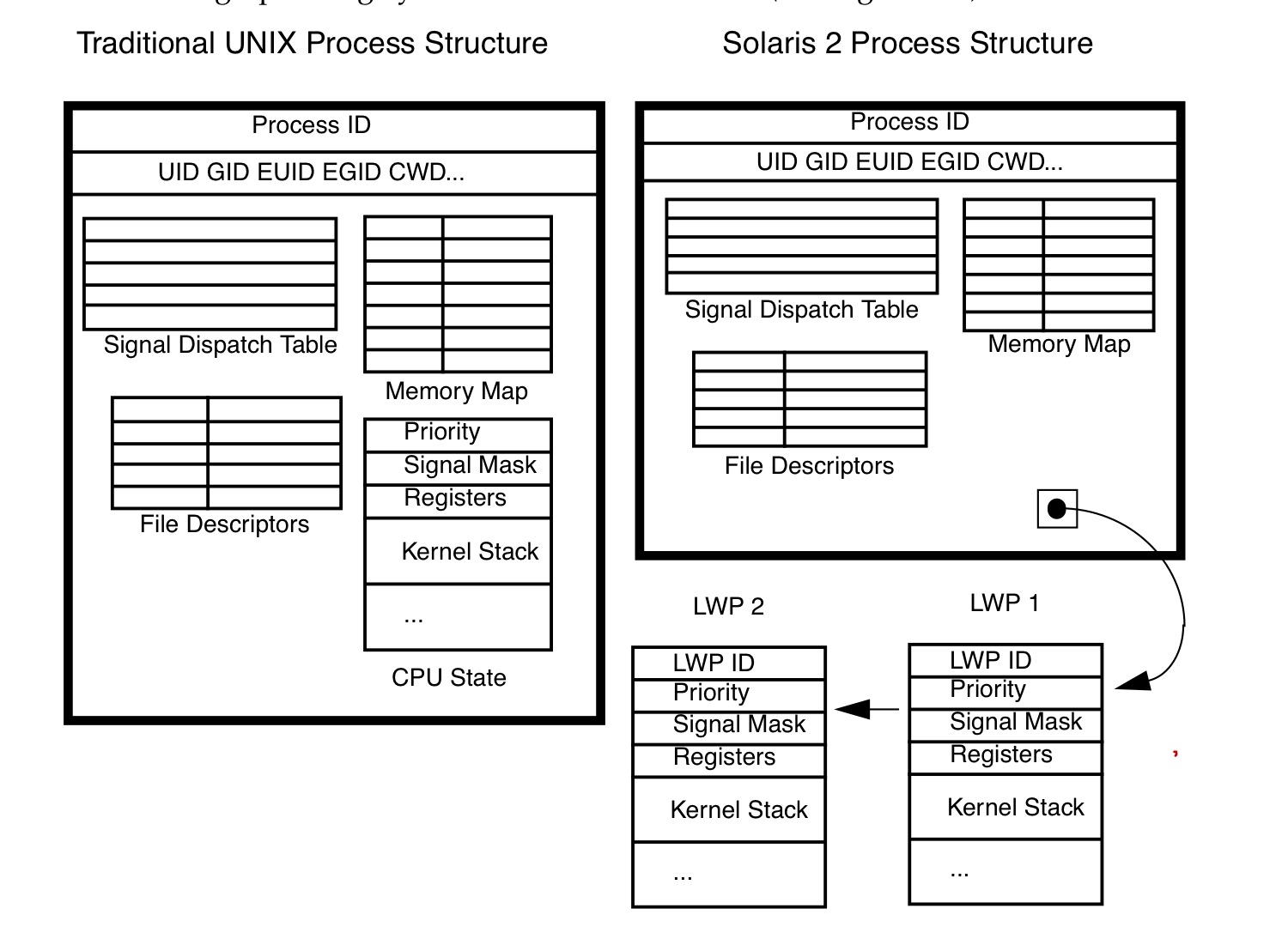

进程和线程

在kernel中process的context

- cpu相关:program counter pointer, stack top pointer, cpu general registers, sates.

- 内存:memory map

- user: uid, gid, euid, egid, cwd.

- 信号: signal dispatch table

- File: file descriptors

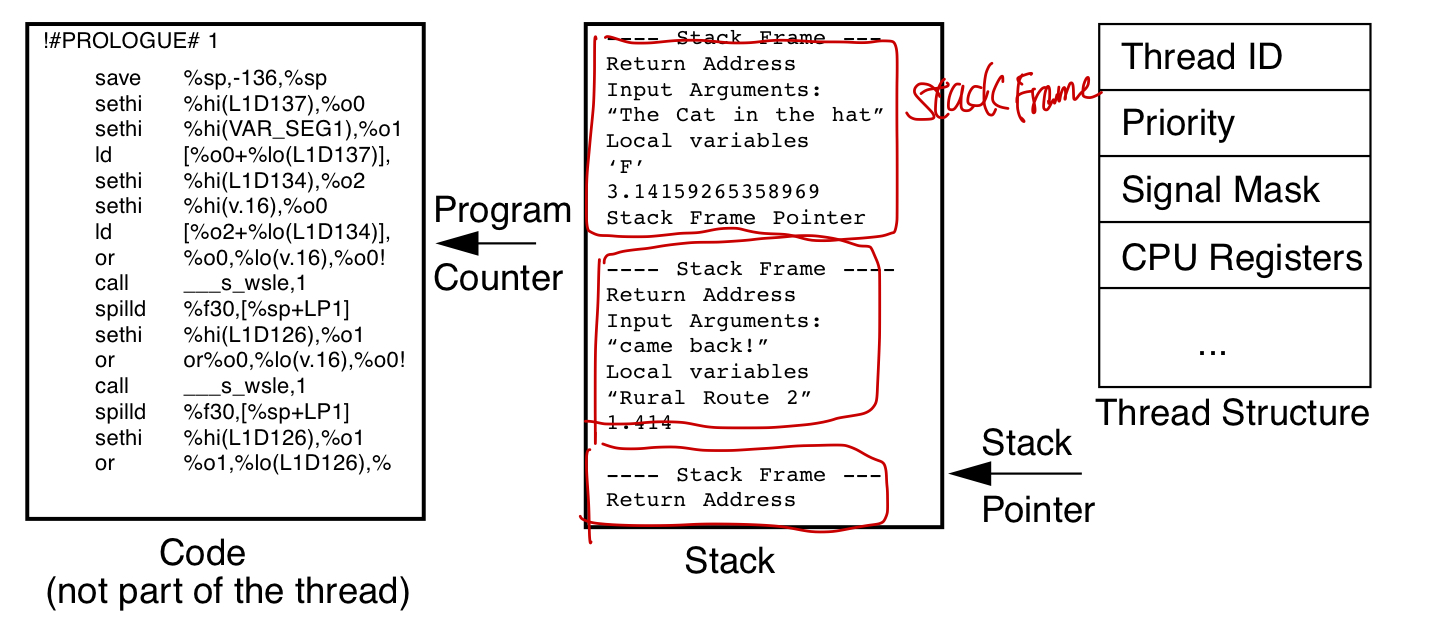

thread的context data

- cpu相关:program counter pointer, stack top pointer, cpu general registers, sates.

- 内存相关: stack

线程的stack是分配在process的heap上的

//设置和获取线程的stack address

include <pthread.h>

int pthread_attr_setstack(pthread_attr_t *attr, void* stackaddr, size_t stacksize);

int pthread_attr_getstack(const pthread_attr_t* attr, void** stackaddr, size_t* stacksize);

整个进程只有一份signal dispatch table

所以signal 中断的时候,说不准会中断到那个thread里面,需要加signal mask来处理。

使用thread的好处

context switch: process的上下文切换比thread的context switch 耗时间.memory share: thread之间的通信,共享process的内存,file等资源比process之间的通信,share内存方便.

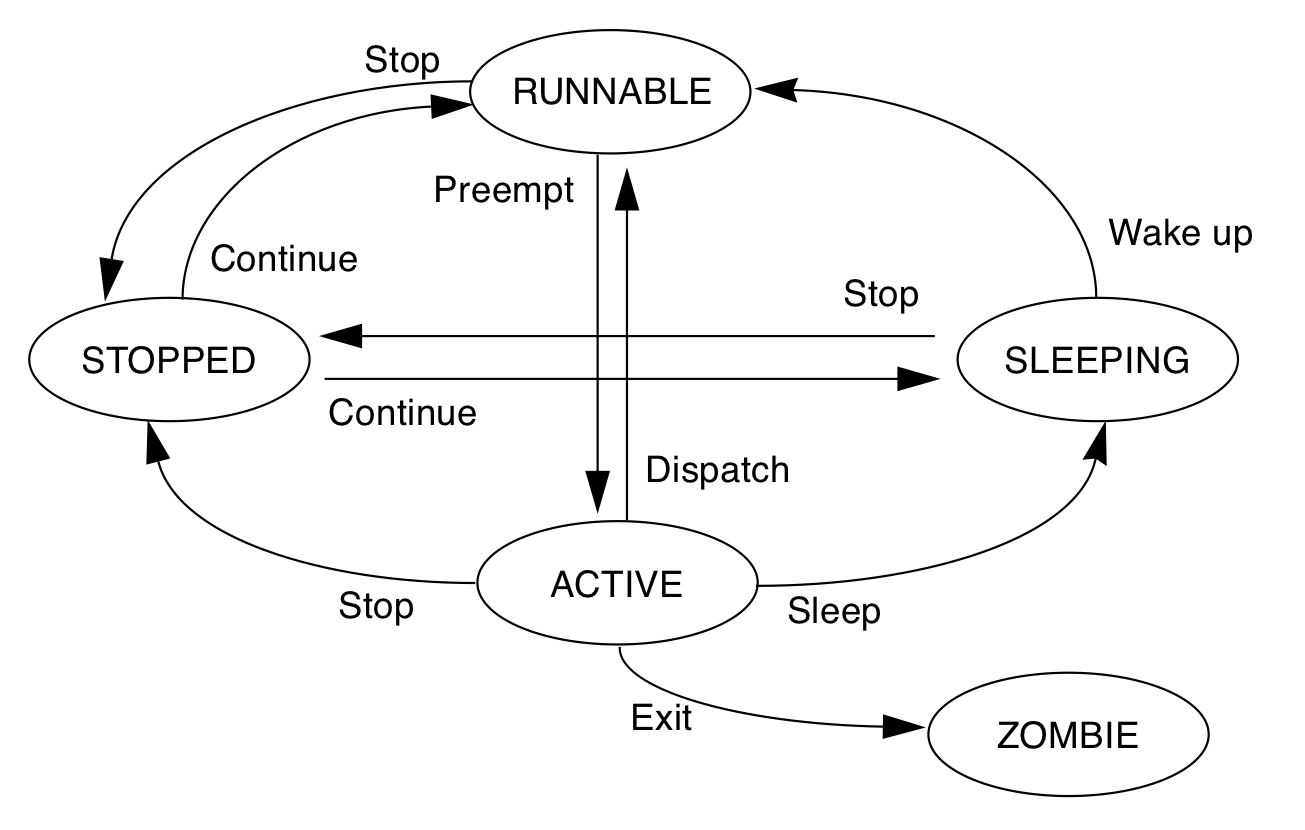

线程调度和生命周期

线程调度

线程有两种调度方式,一种是完全在user space, 由thread库做调度,优点是省了system call 从而省下了从user space 到kernel space的切换, 比较快,缺点是,有一个线程挂在IO上后,整个process都会被挂起.(可以把block的system call 改成nonblock的,使用asyc io来解决这个问题).

另外一种是kernel 实现的light weight process(lwp), lwp避免了整个线程被挂起的缺点,但是需要从user space 到kernel space的切换, 比完全user space实现的线程慢一点。

现实中这两种的实现的方式可以混合起来, 混合方式如下:

- 多个线程对应一个lwp

- 一个线程对应一个lwp

- 多个线程对应多个lwp

在pthread 中可以这么设置调度的属性:

//pthread中设置调度scope

//PTHREAD_SCOPE_SYSTEM 表示system 全局的, PTHREAD_SCOPE_PROCESS 表示process scope的。

pthread_attr_t attr;

pthread_attr_init(&attr);

pthread_setscope(&atttr, PTHREAD_SCOPE_SYSTEM);

pthread_create(&tid, &attr, foo, NULL);

影响线程调度的一些属性

- scope: PTHREAD_SCOPE_PROCESS, PTHREAD_SCOPE_GLOBAL

- policy: SCHED_RR, SCHED_FIFO, SCHED_OTHER

- priority

- inheritance

线程状态以及状态之间的迁移关系如下图:

四种running中的线程被切出去的状况

- synchronization 线程require lock的失败被挂在lock的sleep queue上。

- preemption 被抢占了,T1在运行的时候,一个更高优先级的线程T2到了runnable的状态, T1会被T2抢占了。

- yielding. 线程T1主动调用sched_yield, 如果有和T1优先权一样的T2线程,就切换到T2线程,如果没有,T1就接着运行。

- time-slicing. T1的时间片用完了,和T1有同样优先权的T2接着运行。

创建和退出线程

//create

int pthread_create(pthread_t* thread, const pthread_attr_t* attr, void*(* start_routine)(void*), void* arg);

//exit

void pthread_exit(status);

线程的返回值,一种是函数执行结束后,直接return的值,另外一种是pthread_exit(status)这个的返回值。

join: 等待线程执行结束

join之后线程会处于阻塞状态直到等待的线程T1执行完毕,join之后t1线程的相关内存会被清理掉,所以说一个子线程只能被join一次.

设置线程的属性为joinable

pthread_t thread_id;

pthread_attr_t attr;

pthread_attr_init(&attr);

pthread_attr_setdetachstate(&attr, PTHREAD_CREATE_JOINABLE);

pthread_create(&thread_id, &attr, work, (void*)arg);

阻塞等待线程的执行结果,获取线程的返回结果

//等待t1线程执行结束, exit_status 是子线程的返回值.

pthread_join(t1, &exit_status)

joinable线程和detehced线程的区别是线程结束的时候,资源(线程对应的标识符pthread_t, 线程返回信息)该怎么释放.

对于joinable线程t1, 只有当其他线程对t1调用了pthread_join之后, 线程t1才会释放所占用的资源, 否则 会进入类似于进程的zombile状态,这些资源不会被会回收掉.

使用信号量 等待线程执行结束

使用信号量等待一堆子线程执行结束, 在主线程里面调用thread_signle_barrier, 然后子线程结束的时候调用SEM_POST(barrier)

void thread_signle_barrier(sem_t* barrier, int count){

while( count > 0) {

SEM_WAIT(barrier);

count--;

}

}

detach

如果想要t1线程执行结束收系统自动回收t1的资源, 而不是通过调用pthread_join回收资源(会阻塞线程), 我们可以将线程设置为deteched, 有三种方式可以设置线程为deteched.

- 创建线程时指定线程的 detach 属性: pthread_attr_setdetachstate(&attr, PTHREAD_CREATE_DETACHED);

- 通过在子线程中调用 pthread_detach(pthread_self());

- 在主线程中调用 pthread_detach(thread_id);(非阻塞, 执行完会立即会返回)

取消线程的执行

在pthread中可以通过pthread_cancel(t1)来取消线程t1的执行, 这个会设置线程t1的cancel state, 由线程t1在自己在cancel point 检查是否退出线程, 在退出线程的时候会执行cleanup stack中的函数(比如释放自己hold的锁). 一般会block的函数调用,比如sem_wait, pthread_cond_wait或者会block的系统调用前后检查check point.

如下代码段:

void cleanup_lock2(void* arg){

pthread_mutex_unlock((pthread_mutex_t*)arg)

}

void thread1_run(){

pthread_mutex_lock(&answer_lock);

pthread_cleanup_push(cleanup_lock2, (void*)&answer_lock);

while(!firest_thread_to_find_answer) {

pthread_cond_wait(&cvn, &answer_lock);

}

pthread_cleanup_pop(0)

}

也可以通过pthread_setcanceltype设置为异步取消PTHREAD_CANCEL_ASYNCHRONOUS,这样会给t1线程发送SIGCANCEL信号,t1线程在信号处理函数中结束自己的执行。

Signal 信号处理

Linux 多线程应用中,每个线程可以通过调用 pthread_sigmask() 设置本线程的信号掩码, pthread_kill像某个线程发送signal.

signal handler 异步的方式处理信号

多线程处理signal时候需要注意事项

- 信号处理函数尽量只执行简单的操作,譬如只是设置一个外部变量,其它复杂的操作留在信号处理函数之外执行;

- errno 是线程安全,即每个线程有自己的 errno,但不是异步信号安全。如果信号处理函数比较复杂,且调用了可能会改变 errno 值的库函数,必须考虑在信号处理函数开始时保存、结束的时候恢复被中断线程的 errno 值;

- 信号处理函数只能调用可以重入的 C 库函数(只能调用async safe 的函数);譬如不能调用 malloc(),free()以及标准 I/O 库函数等;

- 信号处理函数如果需要访问全局变量,在定义此全局变量时须将其声明为 volatile,以避免编译器不恰当的优化

sigwait, 同步串行方式

等待信号的到来,以串行的方式从信号队列中取出信号进行处理.

void signal_hanlder_thread() {

sigemptyset(&waitset);

sigaddset(&waitset, SIGRTMIN);

sigaddset(&waitset, SIGUSR1);

while (1) {

//串行的方式处理信号

rc = sigwaitinfo(&waitset, &info);

if (rc != -1) {

sig_handler(info.si_signo);

}

}

Thread local storage

TLS是只在线程自己可见的全局数据, 而不必担心别的线程会改变这个全局数据, 比如要实现每个线程对db的connection单例模式的话,可以把线程的全局connection单例变量存在TLS中。 在使用中有两种方式,一个是pthread_key的方式,另外一个是使用gcc提供的__thread.

Thread Specific Data

pthread_keycreate

pthread_setspecific

pthread_getspecific

__thread

__thread是gcc提内置的attr, 它只能用于修饰POD类型,不能修饰class类型,因为它无法自动调用构造函数和析构函数。 __thread每个线程都有一份独立的实体,线程之间相互不影响.

int g_var; // 全局变量

__thread int t_var; //thread变量

线程的同步

atomic 指令

线程执行的时候,在两个指令之间,随时都可能会被抢占掉, 所以需要一个atomic的指令来避免这种状况.

atomic test and set style: ldstub

ldstub (load and store unsigned byte) 就是一个atomic test and set的指令, 从内存中载入一个unsigned字节,并且把内存中那个字节设置为1.

一个mutex lock的实现

try_agin: ldstub address -> register

compare register, 0

branch_equal got_it

call go_to_sleep

jump try_again

got_it: return

从这儿可以看到,线程从go_to_sleep返回之后,需要去重新获取lock, 如果获取失败,就接着go_to_sleep.

basic primitive

所有线程之前shared的数据需要被用lock保护起来,比如全局数据,传入到另外一个线程的Data struct, 还有static数据。

mutex lock(互斥锁)

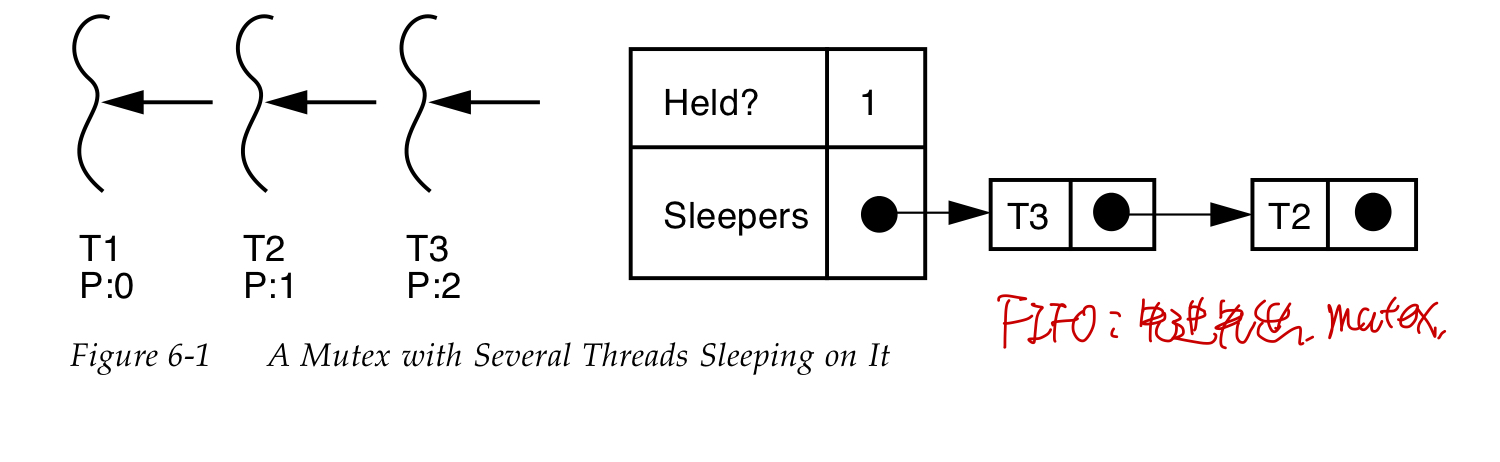

线程获取mutex lock失败以后,会被放到mutex对应的sleep队列中。

pthread_mutex_lock

//critical section

pthread_mutex_unlock

另外一种非阻塞的获取锁的方法pthread_mutex_trylock 如果获取锁成功返回0,否则返回EBUSY.

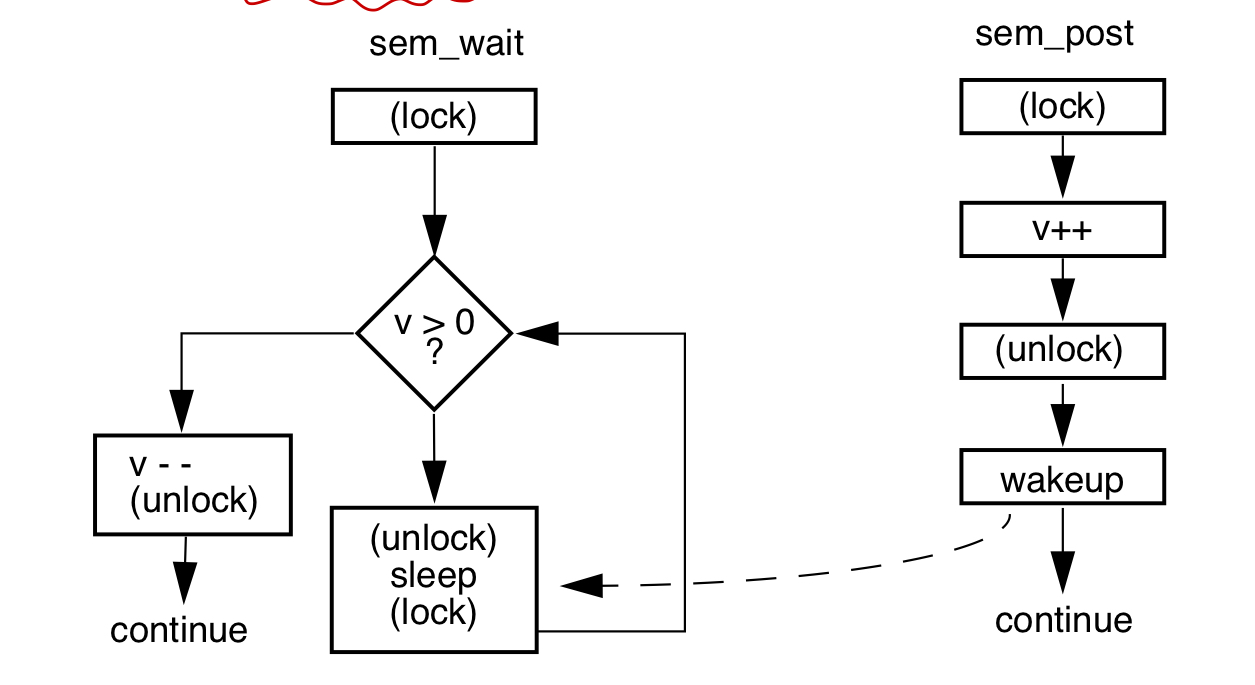

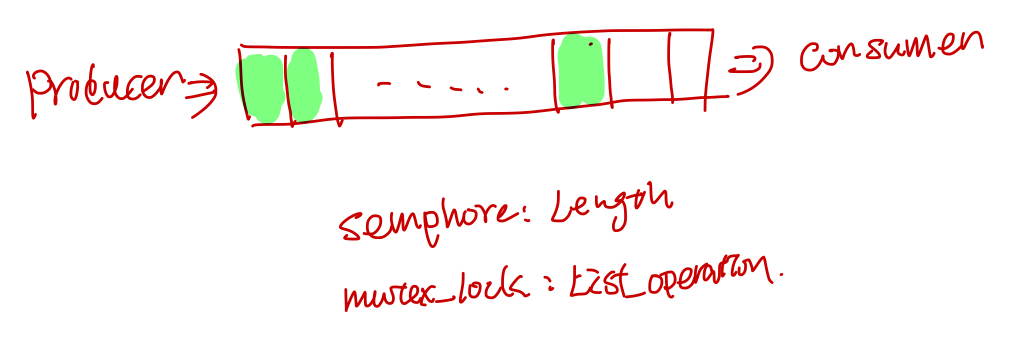

semaphores(信号量)

信号量机制用于协调多个资源的使用(比如一个队列或者缓冲区),semaphores的值表示可用资源的数量(队列中可用资源的个数)。常用于解决生产者和消费者问题.

// 初始化

int sem_init(sem_t *sem, int pshared, unsigned int val);

// 没有可用的信号量就等待,否则

int sem_wait(sem_t *sem);

// 释放一个信号量,信号量的值加1

int sem_post(sem_t *sem);

信号量处理流程

生产者消费者问题, 假设队列的长度是20:

#include <semaphore.h>

//shared global vars

sem_t sem_producer;

sem_t sem_consumer;

//list

void producer(){

while(1){

sem_wait(sem_consumer);

pthread_mutex_lock(list_lock);

add(list);

pthread_mutex_unlock(list_lock);

sem_post(sem_producer);

}

}

void consumer(){

while(1) {

sem_wait(sem_producer);

pthread_mutex_lock(list_lock);

consume(list);

pthread_mutex_unlock(list_lock);

sem_post(sem_consumer);

}

}

void main(){

sem_init(&sem_producer, 0);

sem_init(&sem_consumer, 20);

pthread_t producer_tid;

pthread_t consumer_tid;

pthread_create(&producer_tid, nullptr, producer, nullptr);

pthread_create(&consumer_tid, nullptr, consumer, nullptr);

}

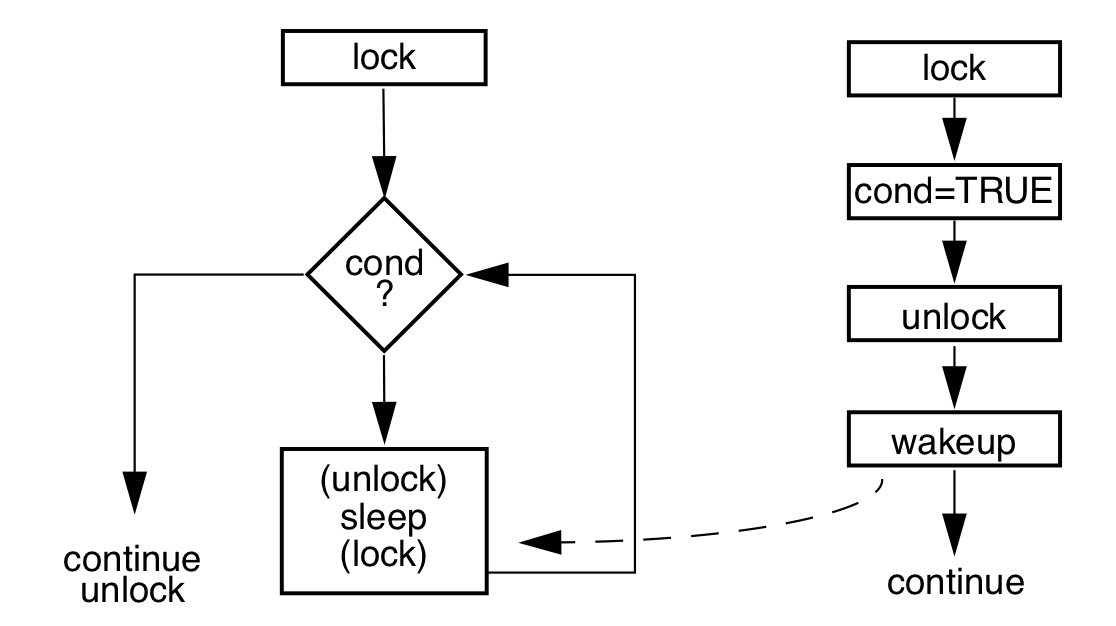

condition var (条件变量)

condition var 的流程, condition var 访问需要用个mutex lock保护起来, condition判断失败之后,会unlock 保护condition var 的lock, 然后进入sleep, 之后被唤醒的时候,会再次去获取condition var的lock。

<code>

// 初始化

pthread_cond_t cond = PTHREAD_COND_INITIALIZER;

// 动态初始化

int pthread_cond_init(pthread_cond_t* restrict cond, const pthread_condattr_t* restrict attr);

//销毁

int pthread_cond_destroy(pthread_cond_t* cond);

//等待

int pthread_cond_wait( pthread_cond_t* restrict cond, pthread_mutex_t* restrict mutex );

int pthread_cond_timedwait( pthread_cond_t* restrict cond, pthread_mutex_t* restrict mutex, const struct timespec* restrict abstime );

// 通知

// singal 函数一次只能唤醒一个线程, 而 broadcast 会唤醒所有在当前条件变量下等待的线程.

int pthread_cond_broadcast(pthread_cond_t* cond);

int pthread_cond_signal(pthread_cond_t* cond);

wait for condition

// safely examine the condition, prevent other threads from

// altering it

pthread_mutex_lock (&lock);

while ( SOME-CONDITION is false)

pthread_cond_wait (&cond, &lock);

// Do whatever you need to do when condition becomes true

do_stuff();

pthread_mutex_unlock (&lock);

signal condition

// ensure we have exclusive access to whathever comprises the condition

pthread_mutex_lock (&lock);

ALTER-CONDITION

// Wakeup at least one of the threads that are waiting on the condition (if any)

pthread_cond_signal (&cond);

// allow others to proceed

pthread_mutex_unlock (&lock)

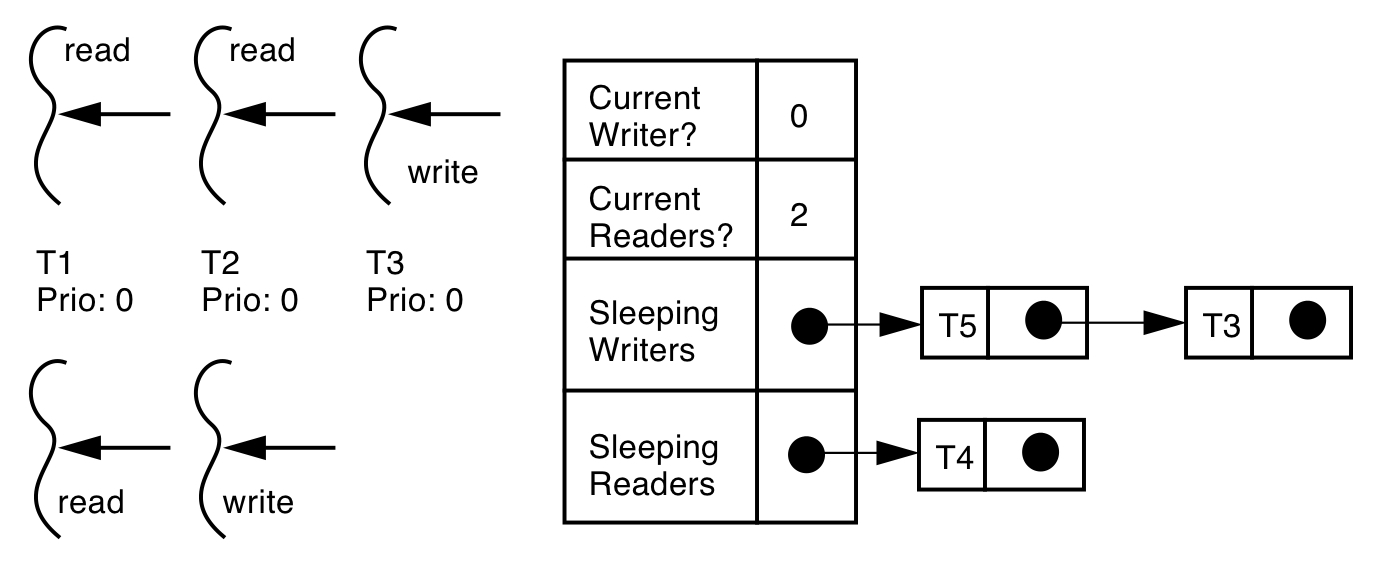

read write lock (读写锁)

在某个时间内,多个线程可以同时获得读锁, 如果已经有线程获得了读锁,那么尝试获取写锁的将被block, 如果已经有线程获取了读锁,那么其他线程的尝试获取读锁或者写锁将会被block.

pthread_rwlock_t rwlock;

int pthread_rwlock_init(pthread_rwlock_t* restrict rwlock, const pthread_rwlockattr_t * restrict attr);

int pthread_rwlock_destroy(pthread_rwlock_t* rwlock);

// 获取读锁

int pthread_rwlock_rdlock(pthread_rwlock_t* rwlock);

// 获取写锁

int pthread_rwlock_wrlock(pthread_rwlock_t* rwlock);

// 释放锁

int pthread_rwlock_unlock(pthread_rwlock_t* rwlock);

Spin lock (自旋锁)

多次trylock, 如果失败了再block, 它的出发点是trylock这个指令的时间很短(比如2us)然后mutex block一次可能需要42us,所以它先尝试几次, 如果在这几us内,lock被释放了,那么能够成功的获取锁了。

spin_lock(mutex_t* m) {

for(int i = 0; i < SPIN_COUNT; i++) {

if (pthread_mutex_trylock(m) != EBUSY) {

return;

}

}

pthread_mutex_lock(m);

return;

}

Adaptive Spin lock

在很多kernel里面使用的,kernel先看拥有锁的线程在不在running(如果在跑的话,那么线程可能短时间内会释放这个锁,所以值得spin几次去尝试下), 如果不在running 状态的话,就直接去require lock了,然后线程会被block.

使用spin lock的时候,需要好好的评估下到底值不值得,就是critical section hold住lock的时间会不会很长。。如果一般很短的话,值得用spin lock,否则的话用spin lock反而浪费时间。

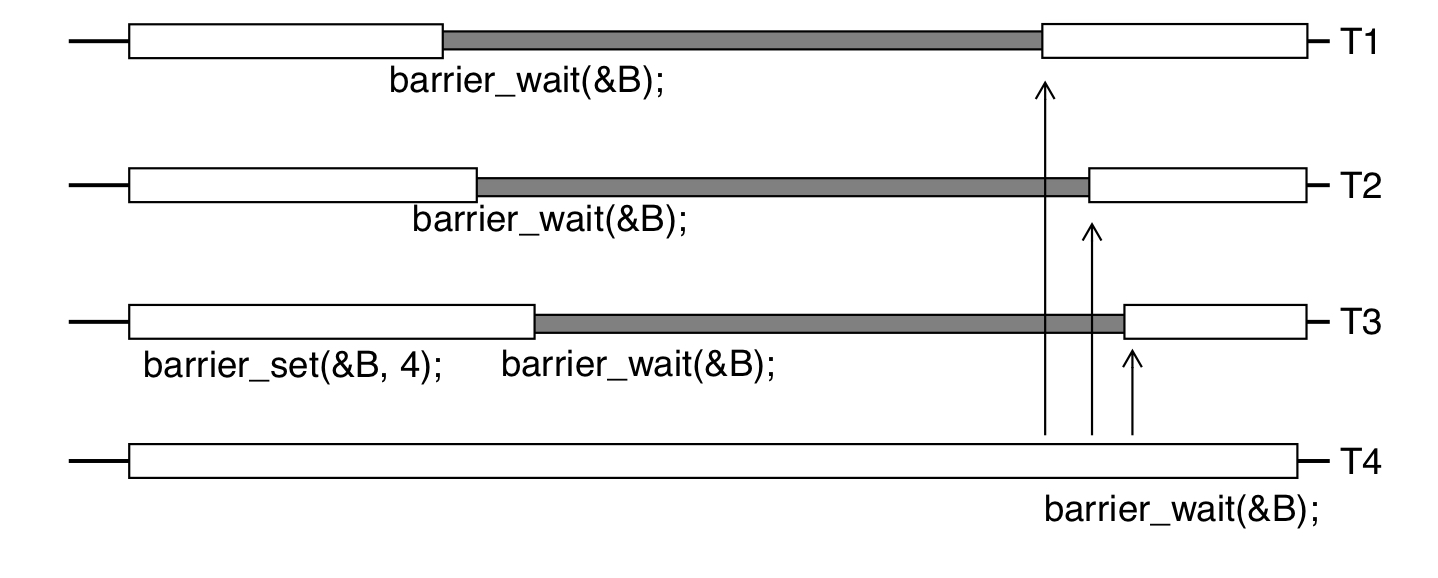

Barriers

pthread_barrier_t mybarrier;

//初始化

pthread_barrier_init(&mybarrier, NULL, THREAD_COUNT + 1);

pthread_barrier_destroy(&mybarrier);

pthread_barrier_wait(&mybarrier);

等待最后一个线程达到barrier点。

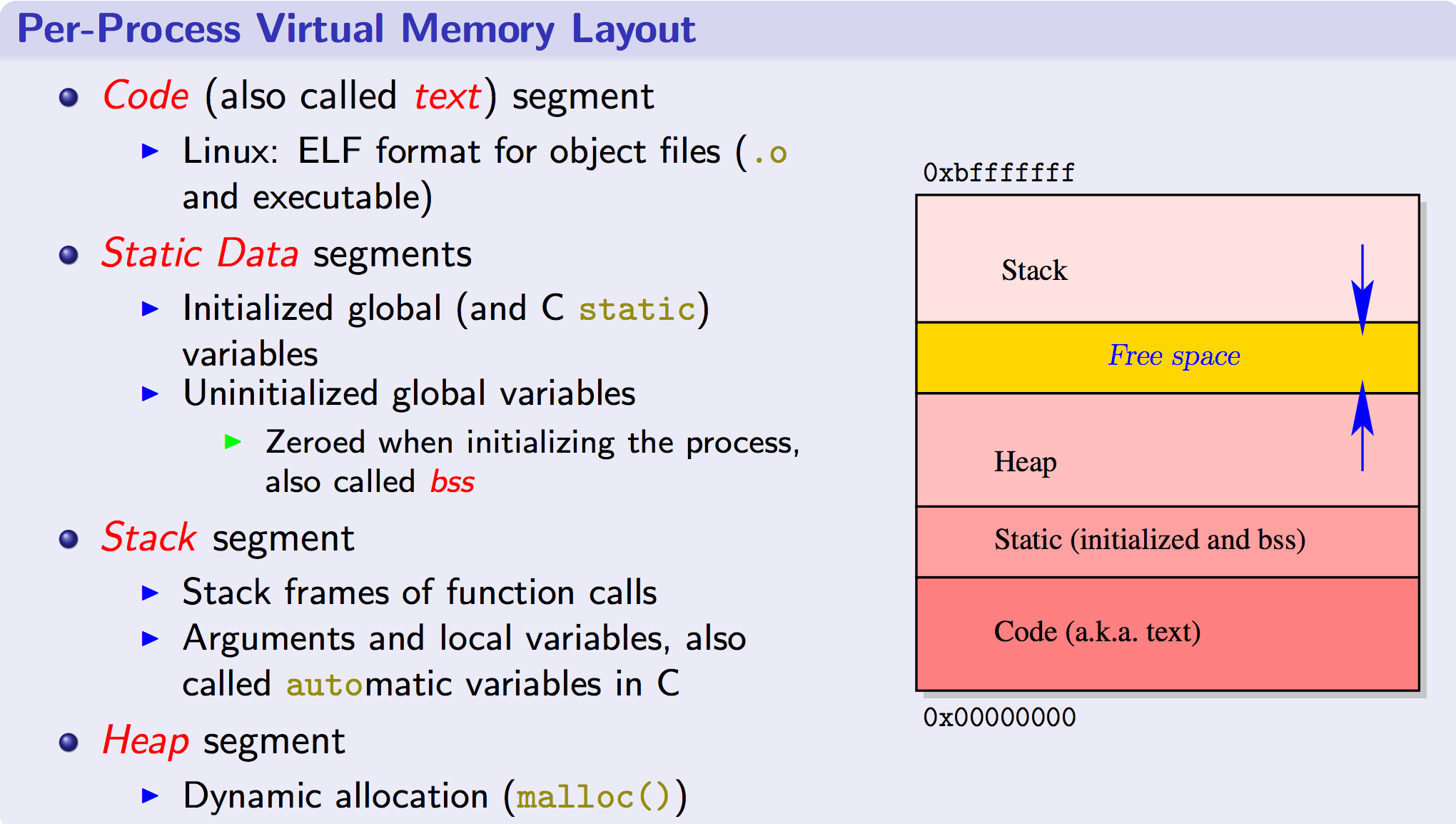

附录

- linux中的process的virutal memory layout 参见Processes and Memory Management

参考

Glibc的pthread实现代码研读 1: 线程的生命周期

本文主要包含pthread线程在linux上的创建,执行,exit, detach, join, cancel, thread local storage。

pthread_t

struct pthread定义在nptl/descr.h中, 这边抽几组主要的field来说明下(这里为了方便描述,对field在struct的顺序做了重新的编排)。

首先是创建完线程之后,系统给的id和各种flag attribute.

/* Flags. Including those copied from the thread attribute. */

int flags;

pid_t tid;

/* Lock to synchronize access to the descriptor. */

int lock;

然后最显而易见的是, 线程要执行的函数指针,函数参数以及函数执行的结果, 这几个字段会在线程的入口start_thread中用到。对于result字段: pthread_join(t1, &status), 这个会等待线程t1执行结束,然后把结果放到status中。

//保存线程返回结果

void *result;

// 线程执行的函数和参数

void *(*start_routine) (void *);

void *arg;

然后一些field用于处理下面这几种异常情况: 线程如果抛异常了,线程调用pthread_exit提前exit了,线程被其它线程pthread_cancel了。

// 线程cancel的状态

int cancelhandling;

// 线程被cancel的时候,处理cleanup callback和cleanup jmp

struct _pthread_cleanup_buffer* cleanup;

struct pthread_unwind_buf* cleanup_jmp_buf;

/* Machine-specific unwind info. */

struct _Unwind_Exception exc;

标明线程是被join的还是已经deteched字段, 这个字段涉及到线程的pthread struct该什么时候释放。

struct pthread* joinid;

#define IS_DETACHED(pd) ((pd)->joinid == (pd))

stack相关的field, 在ALLOCATE_STACK和回收statck的时候会用到,由于pthread的这个struct也是放在stack上的,因此需要一些参数记录pthread的offset, user_statck表示是否是由用户提供的stack。

/* True if the user provided the stack. */

bool user_stack;

void *stackblock;

size_t stackblock_size;

/* Size of the included guard area. */

size_t guardsize;

/* This is what the user specified and what we will report. */

size_t reported_guardsize;

thread specific data相关的字段

// 用于thread specific data, thread local storage

struct pthread_key_data

{

uintptr_t seq;

void* data;

} specific_1stblock[PTHREAD_KEY_2NDLEVEL_SIZE];

struct pthread_key_data* specific[PTHREAD_KEY_1STLEVEL_SIZE];

最后调度策略和调度参数相关的字段,在线程create的时候,会调用sched_setaffinity, sched_setscheduler让系统设置这些参数。

// 调度策略和调度参数

struct sched_param schedparam;

int schedpolicy;

pthread struct 的alloc和free

nptl/allocatestatck.c 中的allocate_stack和__deallocate_stack负责alloc和free pd struct。如果用的是系统分配的stack话, pthread有个stack list,当alloc的时候,从这个stack list中取出一个,然后在free的时候,把这个stack放回到stack list中。

这就导致了一个问题, pthread_t 并不适合作为线程的标识符,比如下面两个线程的pthread_t的地址是一样的(参考自Linux 多线程服务端编程: 4.3节):

int main() {

pthread_t t1, t2;

pthread_create(&t1, NULL, threadFunc, NULL);

printf("%lx\n", t1);

pthread_join(t1, NULL);

pthread_create(&t2, NULL, threadFunc, NULL);

printf("%lx\n", t2);

pthread_join(t2, NULL);

}

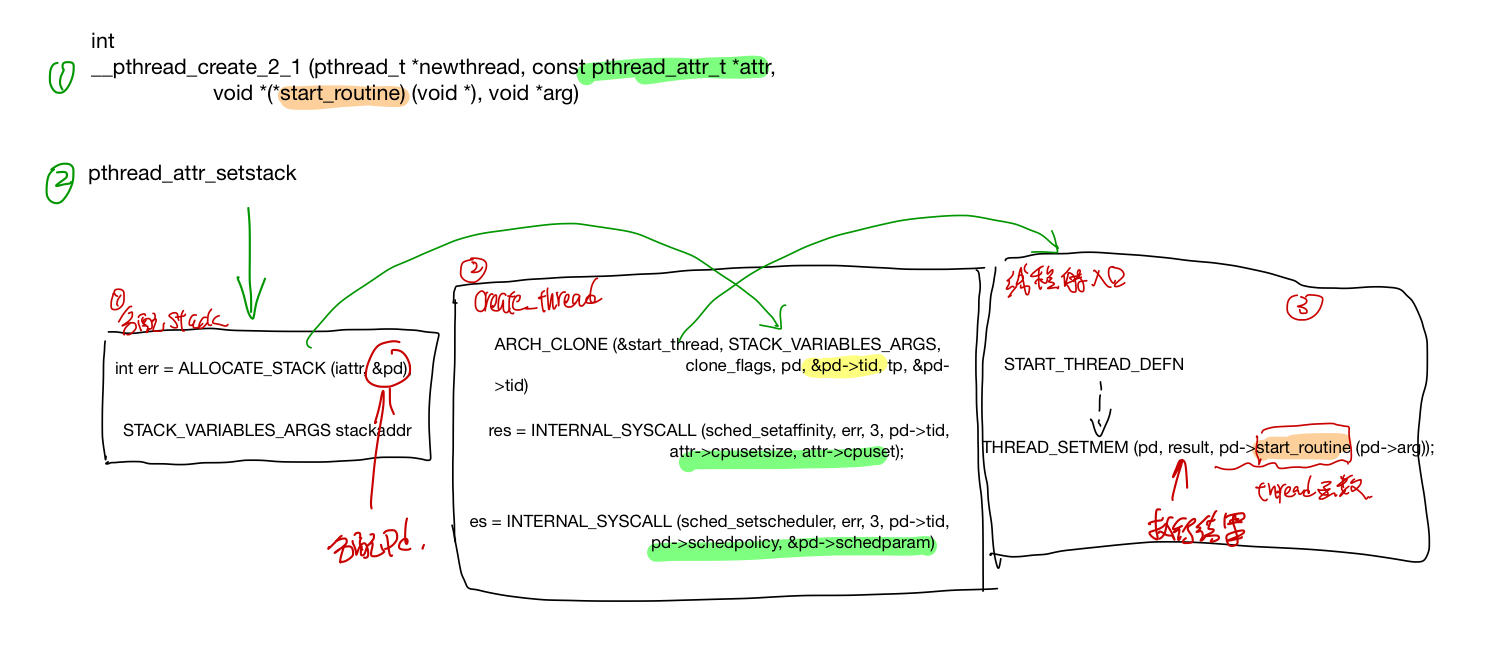

pthread_create

pthread create 首先分配线程的栈,并在这个栈上划出一片内存给pthread struct, 然后调syscall clone(2) 创建一个线程,创建的新的线程会从START_THREAD_DEFF 这个入口开始执行起来,最后线程的执行结果保存在pd->result里面, 用户可以通过pthread_attr_setstack来指定线程stack的内存,也可以直接使用系统的内存。

分配stack, 使用用户提供的stack或者系统分配一个stack(pd 这个struct也存放在stack里面了)

分配stack, 使用用户提供的stack或者系统分配一个stack(pd 这个struct也存放在stack里面了)

ALLOCATE_STACK(iattr, &pd)

create_thread 调用linux系统接口clone创建线程, 如果线程要指定在某个CPU上跑的话,调用sched_setaffinity设置好cpuset, 最后何止好调度策略和调度参数。

ARCH_CLONE(&start_thread, STACK_VARIABLES_ARGS, clone_flags, pd, &pd->tid, tp, &pd->tid)

INTERNAL_SYSCALL(sched_setaffinity, err, 3, pd->tid, attr->cpusetsize, attr->cpuset)

INTERNAL_SYSCALL(sched_setscheduler, err, 3, pd->tid, pd->schedpolicy, &pd->schedparam)

其中clone 的flags如下:

const int clone_flags = (CLONE_VM | CLONE_FS | CLONE_FILES | CLONE_SYSVSEM

| CLONE_SIGHAND | CLONE_THREAD

| CLONE_SETTLS | CLONE_PARENT_SETTID

| CLONE_CHILD_CLEARTID

| 0);

CLONE_THREAD, 标明是创建一个线程,和创建者同一个group, 同一个parent。

STACK_VARIABLES_ARGS对应着上一步ALLOCATE_STACK分配好的内存地址, 这块内存会作为新的线程的stack来用。

clone中的的start_thread就是线程的entry_point, 这个函数定义在nptl/pthread_create.c里面 START_THREAD_DEFF, 这个函数就是新创建的线程的入口。

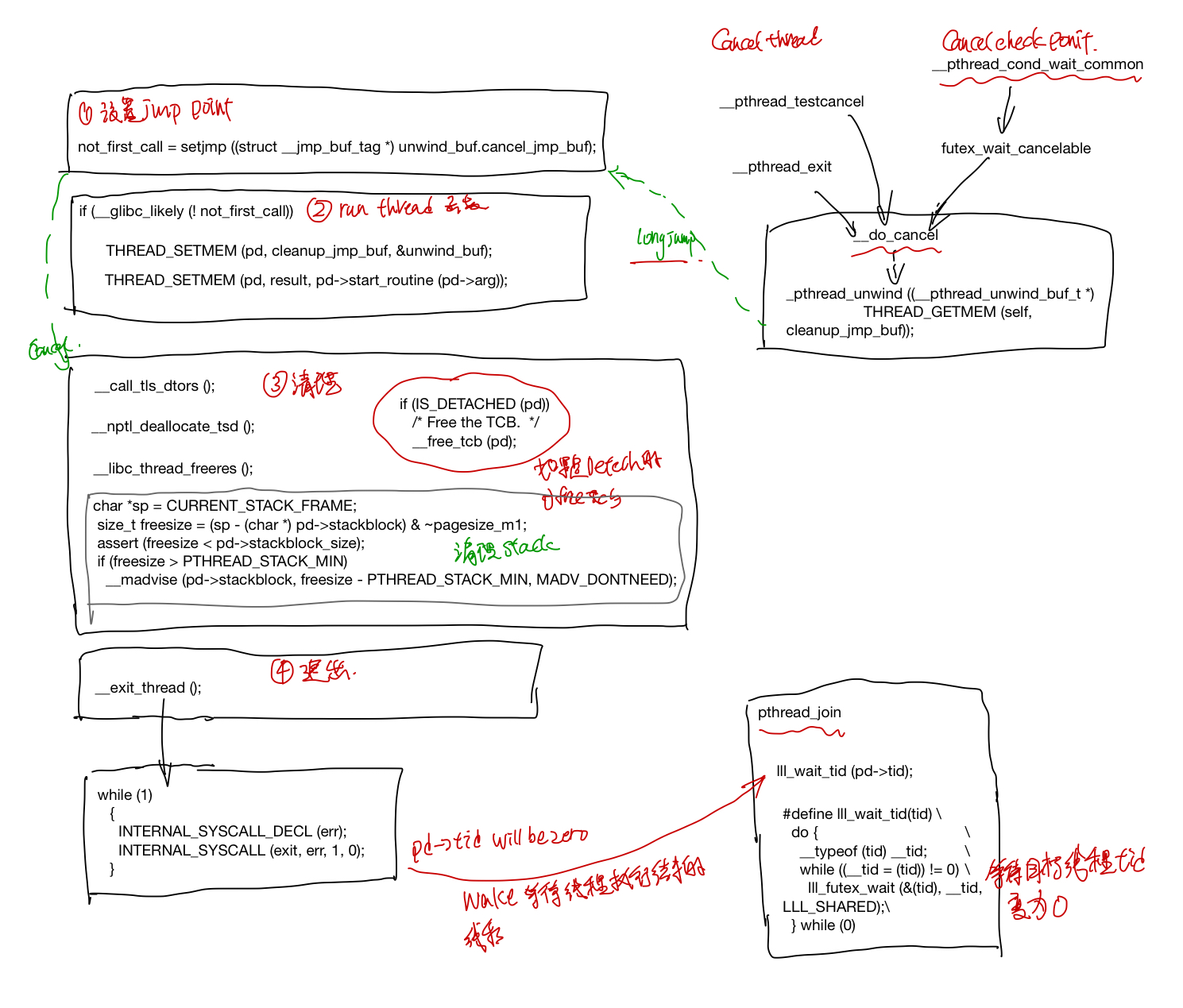

start thread

start thread是线程的入口, 在跑用户函数之前,会设置一个jmp point, 之后等线程执行结束的时候(调用pthread_exit, 或者线程被cancel掉的时候),会longjump 回到这个函数, 接着做线程执行完的清理工作。

如果线程是Deteched, 那么线程的pd结构就会被释放掉(因为pthread返回的status指针是保存在pd->result这个里面的),否则就要等pthread_join完之后释放掉。

最后线程exit_thread之后,会把pd中的tid设置为0,这样就可以唤醒等待join该线程结束的线程。

- 设置好unwind buffer, do cancel的时候可以跳回来

int not_first_call;

not_first_call = setjmp ((struct __jmp_buf_tag* ) unwind_buf.cancel_jmp_buf);

if (__glibc_likely (! not_first_call))

{

THREAD_SETMEM (pd, cleanup_jmp_buf, &unwind_buf);

setjmp和longjmp是非局部跳转函数, 它可以在在栈上跳过若干调用帧,返回到当前函数调用路径上的某一个函数中, 若直接调用则返回0,若从longjmp调用返回则返回非0值的longjmp中的val值。之后的do_cancel可能会longjmp到这个地方。

- 调用用户提供的函数, 结果存在

pd->result中

#ifdef CALL_THREAD_FCT

THREAD_SETMEM (pd, result, CALL_THREAD_FCT (pd));

#else

THREAD_SETMEM (pd, result, pd->start_routine (pd->arg));

#endif

- 做一些清理工作,清理TLS, 标记stack为可复用状态,如果线程是detached, 则释放pd struct的内存, 否则要在pthread_join里面释放这个pb struct, 如果一个线程既不是deteched,也没有线程在pthread_join等待他,这个pb struct就不会被释放,进入一个类似于zombile的状态。

__call_tls_dtors ();

/* Run the destructor for the thread-local data. */

__nptl_deallocate_tsd ();

/* Clean up any state libc stored in thread-local variables. */

__libc_thread_freeres ();

if (IS_DETACHED (pd))

__free_tcb (pd);

// mark stack resuable

char *sp = CURRENT_STACK_FRAME;

size_t freesize = (sp - (char *) pd->stackblock) & ~pagesize_m1;

assert (freesize < pd->stackblock_size);

if (freesize > PTHREAD_STACK_MIN)

__madvise (pd->stackblock, freesize - PTHREAD_STACK_MIN, MADV_DONTNEED);

// other code

__exit_thread ();

pthread_exit

猜测pthread_exit 的do_cancel的unwind会调用pthread_cleanup_push中注册的cleaup函数,最后会longjmp回到start_thread里面的setjmp那块,继续执行线程结束后的清理工作。

__pthread_exit (void* value)

{

THREAD_SETMEM (THREAD_SELF, result, value);

__do_cancel ();

}

do_cancel定义如下:

__do_cancel (void)

{

struct pthread* self = THREAD_SELF;

THREAD_ATOMIC_BIT_SET (self, cancelhandling, EXITING_BIT);

__pthread_unwind ((__ pthread_unwind_buf_t*)

THREAD_GETMEM (self, cleanup_jmp_buf));

}

pthread_join

pthread_join(t1, &result) 线程会调用lll_wait_tid等到t1执行结束,然后从t1的pd->result获取线程返回的结果, 返回给status,最后释放线程t1对应的pd sturct.

- 检查是否有死锁, 避免等待自己,以及正在被cancel的线程,

if ((pd == self

|| (self->joinid == pd

&& (pd->cancelhandling

& (CANCELING_BITMASK | CANCELED_BITMASK | EXITING_BITMASK

| TERMINATED_BITMASK)) == 0))

&& !CANCEL_ENABLED_AND_CANCELED (self->cancelhandling))

result = EDEADLK;

- 设置

t1->joinid = self;

/* Wait for the thread to finish. If it is already locked something

is wrong. There can only be one waiter. */

else if (__builtin_expect (atomic_compare_and_exchange_bool_acq (&pd->joinid,

self,

NULL), 0))

/* There is already somebody waiting for the thread. */

result = EINVAL;

- 等待t1线程执行结束, 这里的lll_wait_tid 最后会去调用linux提供的futex, 会被挂起来,一直等到t1的tid变为0。

/* Wait for the child. */

lll_wait_tid (pd->tid);

- free t1线程的pd struct

pd->tid = -1;

/* Store the return value if the caller is interested. */

if (thread_return != NULL)

*thread_return = pd->result;

/* Free the TCB. */

__free_tcb (pd);

pthread_detach

标记线程为detached, 把pd的jionid改为自己。

int result = 0;

/* Mark the thread as detached. */

if (atomic_compare_and_exchange_bool_acq (&pd->joinid, pd, NULL))

{

if (IS_DETACHED (pd))

result = EINVAL;

}

else if ((pd->cancelhandling & EXITING_BITMASK) != 0)

__free_tcb (pd);

return result;

pthread_cancel

pthread_cancel 只是把pd->cancelhandling的状态记为CANCLEING_BITMASK|CANCELED_BITMASK。

do{

oldval = pd->cancelhandling;

newval = oldval | CANCELING_BITMASK | CANCELED_BITMASK;

//other code

} while (atomic_compare_and_exchange_bool_acq (&pd->cancelhandling, newval,

oldval);

然后在pthread_testcancel的时候,才真正的调用do_cancel去cancel thread.

//pthread_testcancel --> CANCELLATION_P

if (CANCEL_ENABLED_AND_CANCELED (cancelhandling)) \

{ \

THREAD_SETMEM (self, result, PTHREAD_CANCELED); \

__do_cancel (); \

}

或者一些会check cancel point的调用比如pthread_cond_wait里面,会去检查这个标记,

pthread_cond_wait -->futex_wait_cancelable --> pthread_enable_asynccancel --> __do_cancel

futex_reltimed_wait_cancelable --> pthread_enable_asynccancel --> __do_cancel

sem_wait_common -> futex_abstimed_wait_cancelable --> pthread_enable_asynccancel --> __do_cancel

singal handle

Glibc的pthread实现代码研读 2: 线程同步

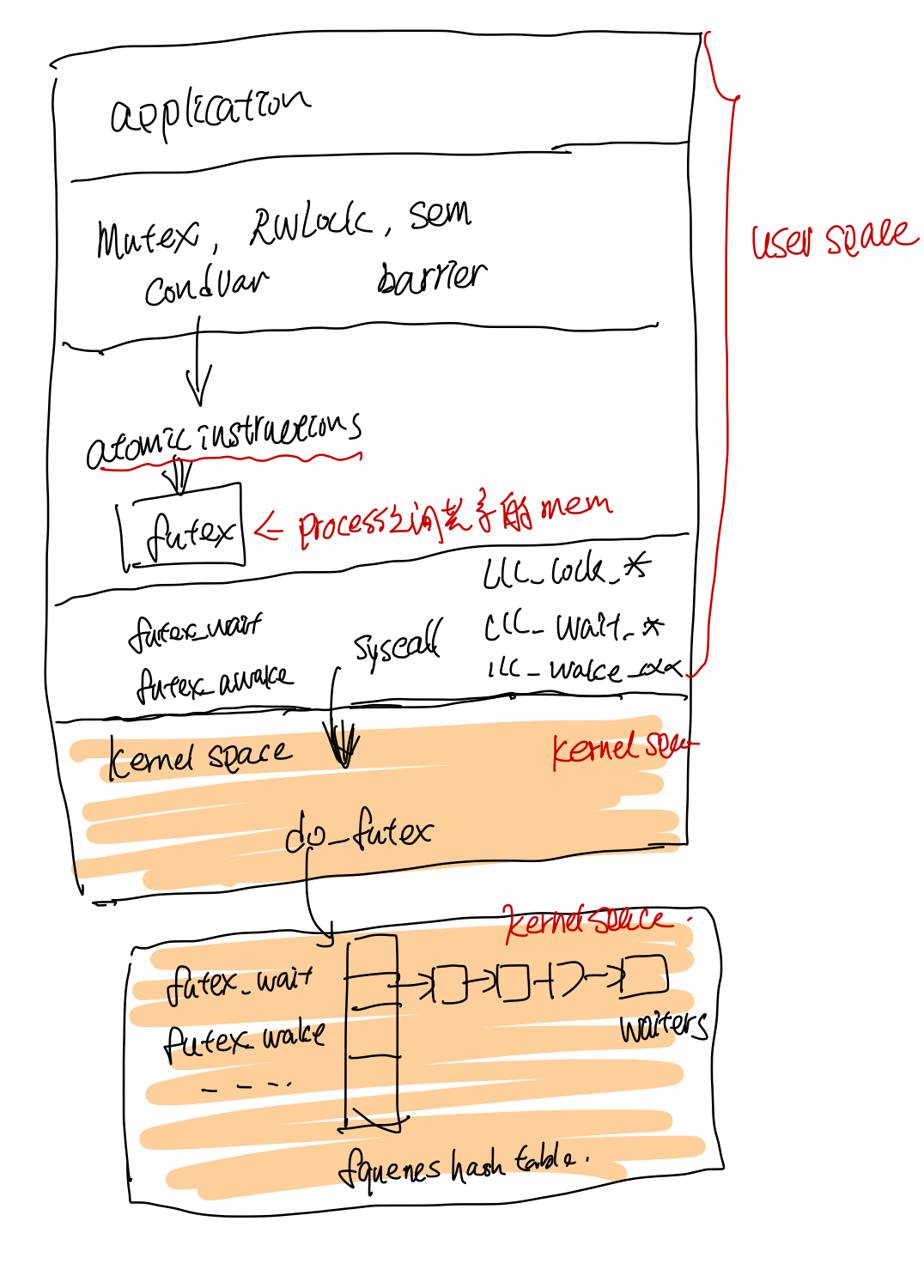

第二部分主要讲述pthread中的线程的同步方法包括mutex, sem, condition var, rwlock, barrier的实现,pthread使用了linux的futex来实现这些同步方法。

futex

pthread中的locks通过linux的futex(faster user space locking)实现, lock放在process之间的共享内存中, pthread通过atomic的指令来对这个lock进行dec, inc, load and test 等操作, 如果有竞态冲突的时候获取锁失败的时候,才会去sys call 调用linux底层的do_futex, 底层把线程放到futex对应的wait队列里面, 然后挂起线程等待被唤醒。

由于只有竞态冲突的时候才需要syscall, 其他情况都不需要,因此节省了很多sys call,这样比较快。

Mutex

xchgl 这个是atomic操作吧,失败了回去调用do_futex, flag 是FUTEX_WAIT

phtread_mutex_lock --> LL_MUTEX_LOCK --> ll_lock --> lll_lock_wait|lll_lock_wait_private --> xchgl

Sem

Condition var

Read write lock

Barrier

Yew

doc overview

Context

VNode

Scope

scheduler

wasm_bindgen

Callback

Axum

Router

Router数据结构

路由注册和分发

handlers

trait Handler

In axum a "handler" is an async function that accepts zero or more "extractors" as arguments and returns something that can be converted into a response.

extract: FromRequest

FromRequest用于将requestPart解析成各种类型,然后传个handler

#![allow(unused)] fn main() { #[async_trait] pub trait FromRequest<B = crate::body::Body>: Sized { /// If the extractor fails it'll use this "rejection" type. A rejection is /// a kind of error that can be converted into a response. type Rejection: IntoResponse; /// Perform the extraction. async fn from_request(req: &mut RequestParts<B>) -> Result<Self, Self::Rejection>; } }

比较常用的有Json, Query, MatchedPath

Json

Json会用serde_json::from_slice将request body bytes解析为对应的类型,用法示例如下:

#![allow(unused)] fn main() { #[derive(Deserialize)] struct CreateUser { email: String, password: String, } async fn create_user(extract::Json(payload): extract::Json<CreateUser>) { // payload is a `CreateUser` } let app = Router::new().route("/users", post(create_user)); async { axum::Server::bind(&"".parse().unwrap()).serve(app.into_make_service()).await.unwrap(); }; }

Query

Query会使用serde_urlencoded将query解析为相应的param, 用法示例如下:

#![allow(unused)] fn main() { fn app() -> Router { Router::new().route("/", get(handler)) } async fn handler(Query(params): Query<Params>) -> String { format!("{:?}", params) } /// See the tests below for which combinations of `foo` and `bar` result in /// which deserializations. /// /// This example only shows one possible way to do this. [`serde_with`] provides /// another way. Use which ever method works best for you. /// /// [`serde_with`]: https://docs.rs/serde_with/1.11.0/serde_with/rust/string_empty_as_none/index.html #[derive(Debug, Deserialize)] #[allow(dead_code)] struct Params { #[serde(default, deserialize_with = "empty_string_as_none")] foo: Option<i32>, bar: Option<String>, } }

Multipart: 文件上传

使用表单获取上传文件,其中form的enctype='multipart/form-data', 并

使用ContentLengthLimit 来限制文件大小。

#![allow(unused)] fn main() { async fn accept_form( ContentLengthLimit(mut multipart): ContentLengthLimit< Multipart, { 250 * 1024 * 1024 /* 250mb */ }, >, ) { while let Some(field) = multipart.next_field().await.unwrap() { let name = field.name().unwrap().to_string(); let data = field.bytes().await.unwrap(); println!("Length of `{}` is {} bytes", name, data.len()); } } async fn show_form() -> Html<&'static str> { Html( r#" <!doctype html> <html> <head></head> <body> <form action="/" method="post" enctype="multipart/form-data"> <label> Upload file: <input type="file" name="file" multiple> </label> <input type="submit" value="Upload files"> </form> </body> </html> "#, ) } }

tower.Layer

Layer 用来写中间件, Axum中提供了HandlerError中间件,可以指定一个函数,将handler的错误 转换为对应的response。ExtractorMiddleware可以将extractor转成middleware,如果extract 成功则继续执行,否则就提前返回。

#![allow(unused)] fn main() { pub trait Layer<S> { /// The wrapped service type Service; /// Wrap the given service with the middleware, returning a new service /// that has been decorated with the middleware. fn layer(&self, inner: S) -> Self::Service; } }

ExtractorMiddleware

#![allow(unused)] fn main() { #[async_trait::async_trait] impl<B> FromRequest<B> for RequireAuth where B: Send, { type Rejection = StatusCode; async fn from_request(req: &mut RequestParts<B>) -> Result<Self, Self::Rejection> { if let Some(auth) = req .headers() .expect("headers already extracted") .get("authorization") .and_then(|v| v.to_str().ok()) { if auth == "secret" { return Ok(Self); } } Err(StatusCode::UNAUTHORIZED) } } async fn handler() {} let app = Router::new().route( "/", get(handler.layer(extractor_middleware::<RequireAuth>())), ); }

HandleErrorLayer

HandleErrorLayer 可以使用闭包的函数将错误转换为相应的response,相应例子如下

问题:可以使用不同的HandlerErrorLayer堆在一起,每个处理自己相应类型的错误吗? 还是需要在这个地方统一来处理?

#![allow(unused)] fn main() { let app = Router::new() .route("/todos", get(todos_index).post(todos_create)) .route("/todos/:id", patch(todos_update).delete(todos_delete)) // Add middleware to all routes .layer( ServiceBuilder::new() .layer(HandleErrorLayer::new(|error: BoxError| async move { if error.is::<tower::timeout::error::Elapsed>() { Ok(StatusCode::REQUEST_TIMEOUT) } else { Err(( StatusCode::INTERNAL_SERVER_ERROR, format!("Unhandled internal error: {}", error), )) } })) .timeout(Duration::from_secs(10)) .layer(TraceLayer::new_for_http()) .layer(AddExtensionLayer::new(db)) .into_inner(), ); }

Response

Extensions

#![allow(unused)] fn main() { /// A type map of protocol extensions. /// /// `Extensions` can be used by `Request` and `Response` to store /// extra data derived from the underlying protocol. #[derive(Default)] pub struct Extensions { // If extensions are never used, no need to carry around an empty HashMap. // That's 3 words. Instead, this is only 1 word. map: Option<Box<AnyMap>>, } }

http::extentions::Extensions::get方法,根据typeid 获取对应的type.

#![allow(unused)] fn main() { pub fn get<T: Send + Sync + 'static>(&self) -> Option<&T> { self.map .as_ref() .and_then(|map| map.get(&TypeId::of::<T>())) .and_then(|boxed| (&**boxed as &(dyn Any + 'static)).downcast_ref()) } }

Examples

Cookies

OAuth

Multipart

Hyper

make_service_fn

Server

tokio

Executor

Executor中主要有Executor, TypedExecutor, enter, DefaultExecutor, Park

-

Executor,TypedExecutor主要作用是spawn future,转换为相应的任务,然后去执行该任务,不断的poll future,直到future complete. -

DefaultExecutor作用,是将tokio::spawn的future转给当前默认的executor. -

enter用于阻止在当前executor context中,再start一个executor -

park是对线程block/unblock操作的抽象.

原文如下(摘自tokio-executor/src/lib.rs)

-

The [

Executor] trait spawns future object onto an executor. -

The [

TypedExecutor] trait spawns futures of a specific type onto an executor. This is used to be generic over executors that spawn futures that are eitherSendor!Sendor implement executors that apply to specific futures. -

[

enter] marks that the current thread is entering an execution context. This prevents a second executor from accidentally starting from within the context of one that is already running. -

[

DefaultExecutor] spawns tasks onto the default executor for the current context. -

[

Park] abstracts over blocking and unblocking the current thread.

Executor impl

实现Executor接口的主要有current thread,task executor, default executor还有thread pool的executor.

DefaultExecutor

DefaultExecutor 扮演了入口的角色,会将spawn调用转发给thread local storage var的Executor;

current thread

current thread executor 是单线程的executor。task spwan和execute是在同一线程上完成的。

代码中Entered和Borrow的作用是干啥的不太明白,感觉这块代码有点绕.

Entered和Borrow定义如下:

#![allow(unused)] fn main() { /// A `CurrentThread` instance bound to a supplied execution context. pub struct Entered<'a, P: Park> { executor: &'a mut CurrentThread<P>, } }

#![allow(unused)] fn main() { /// This is mostly split out to make the borrow checker happy. struct Borrow<'a, U> { id: u64, scheduler: &'a mut Scheduler<U>, num_futures: &'a atomic::AtomicUsize, } }

thread pool sender

thread pool的sender使用future创建相应的task, 然后调用pool的submit_external提交任务

#![allow(unused)] fn main() { fn spawn( &mut self, future: Pin<Box<dyn Future<Output = ()> + Send>>, ) -> Result<(), SpawnError> { self.prepare_for_spawn()?; // At this point, the pool has accepted the future, so schedule it for // execution. // Create a new task for the future let task = Arc::new(Task::new(future)); // Call `submit_external()` in order to place the task into the global // queue. This way all workers have equal chance of running this task, // which means IO handles will be assigned to reactors more evenly. self.pool.submit_external(task, &self.pool); Ok(()) } }

Executor setup

thread local var EXECUTOR的设置过程

#![allow(unused)] fn main() { thread_local! { /// Thread-local tracking the current executor static EXECUTOR: Cell<State> = Cell::new(State::Empty) } }

在调用tokio::spawn时,会通过DefaultExecutor调用相应的Thread local storage中设置好的Executor

#![allow(unused)] fn main() { //tokio-executor/src/global.rs pub fn spawn<T>(future: T) where T: Future<Output = ()> + Send + 'static, { DefaultExecutor::current().spawn(Box::pin(future)).unwrap() } }

#![allow(unused)] fn main() { //tokio-executor/src/global.rs impl DefaultExecutor { #[inline] fn with_current<F: FnOnce(&mut dyn Executor) -> R, R>(f: F) -> Option<R> { EXECUTOR.with( |current_executor| match current_executor.replace(State::Active) { State::Ready(executor_ptr) => { let executor = unsafe { &mut *executor_ptr }; let result = f(executor); current_executor.set(State::Ready(executor_ptr)); Some(result) } State::Empty | State::Active => None, }, ) } } }

park

park是对当前线程block和unblock操作的抽象, 和std的park/unpark操作来比,在线程被blocked的时候,可以去调用一些定制化的功能。

Park impl

Reactor Park

Reactor 相关数据结构如下,

Par接口的park/unpark操作主要依赖于mio的poll和SetReadness。

Thread pool default park

线程池的default park主要依赖于croess beam的park和unpark

ParkThread

数据结构之间关系

接口调用关系

tokio thread pool

schedule

tokio 使用了crossbeam中的Queue, Stealer, Worker等来实现线程池,其中觉得有意思的地方时work stealing策略

每个task被分给worker的过程如下:有个pool.queue作为全局的task队列入口每次spawn task都会将task push到pool.queue中

worker run函数取task的逻辑如下:

- 从自己的worker队列中去取任务.

- 如果自己队列中没任务,则从全局队列中,获取一批任务。

- 如果全局队列中也没任务,则随机的从其他的worker中steal一批任务。

这样做的好处是,降低对全局队列的频繁加锁等操作,而且有steal机制,使得task可以比较均匀的被调度。

task spawn

task 从spawn到最后run的过程:

task wake

worker sleep

worker在sleep时候,会把自己push到pool的sleep_stack上, entry中的park/unpark负责线程的sleep和wake.

worker run

tokio driver

Driver 简单来说,就是io event事件触发后,找到相应等待的task, 然后调用预设好的回调函数.

tokio中事件驱动主要靠mio::poll, 在像mio::register中注册一个event时,会带上一个token(token是在tokio中生成的), driver根据该token建立到SchduleIo的映射,event触发的时候,就会调用schedulIo中预先定义好的方法。

然后事件被触发的时候,mio会把这个token带过来。

task <-> mio event

task和mio event通过token 建立关系,回调函数waker通过过Context包装, 传递给future的poll函数,当future需要等待某个事件时候,就会把事件和context关联起来。然后等事件被触发了,就调用context中预先设置好的waker.

主要数据结构

reactor::inner中的io_dispatch表,用于记录事件token到ScheduleIO的一个映射关系.

#![allow(unused)] fn main() { //reactor.rs pub(super) struct Inner { /// The underlying system event queue. io: mio::Poll, /// ABA guard counter next_aba_guard: AtomicUsize, /// Dispatch slabs for I/O and futures events pub(super) io_dispatch: RwLock<Slab<ScheduledIo>>, /// Used to wake up the reactor from a call to `turn` wakeup: mio::SetReadiness, } }

ScheduledIo, 主要用于指向context

#![allow(unused)] fn main() { pub(super) struct ScheduledIo { aba_guard: usize, pub(super) readiness: AtomicUsize, pub(super) reader: AtomicWaker, pub(super) writer: AtomicWaker, } }

Context 中的waker则定义了如何唤醒task, 对于threadpool 会去调用Task::Schedule方法,而对于current thread, 则会去调用Node.Notify

context 注册过程

首先ctx会在task run时候,被创建,然后传递给future_poll, 经过层层的poll_ready 之类的,注册到Reactor::inner::io_dipatch表中

注册的key会在Reactor::inner::add_source计算出来,然后传递给mio的register函数。

然后mio的poll函数在事件发生时,会将该token带上,在Reactor::dispatch中根据token找到相应的contex waker, 调用对应的wake函数。

thread pool 中 ctx waker的创建

#![allow(unused)] fn main() { //threadpool/task/mod.rs pub(crate) fn run(me: &Arc<Task>, pool: &Arc<Pool>) -> Run { //... let waker = task::waker(Arc::new(Waker { task: me.clone(), pool: pool.clone(), })); let mut cx = Context::from_waker(&waker); //... } }

其中Waker定义如下, event经过dispatch 后, 最终会调用Task::Schedule.

#![allow(unused)] fn main() { // threadpool/waker.rs impl ArcWake for Waker { fn wake_by_ref(me: &Arc<Self>) { Task::schedule(&me.task, &me.pool); } } }

current thread中ctx waker的创建

#![allow(unused)] fn main() { pub fn block_on<F>(&mut self, mut future: F) -> F::Output where F: Future, { // Safety: we shadow the original `future`, so it will never move // again. let mut future = unsafe { Pin::new_unchecked(&mut future) }; let waker = self.executor.scheduler.waker(); let mut cx = Context::from_waker(&waker); // ... other code } }

event, token, scheduleIO